Python & OpenGL for Scientific Visualization

Copyright (c) 2018 - Nicolas P. Rougier <Nicolas.Rougier@inria.fr>

Preface


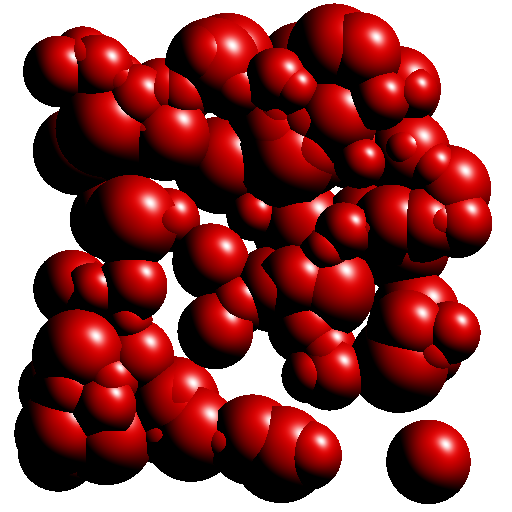

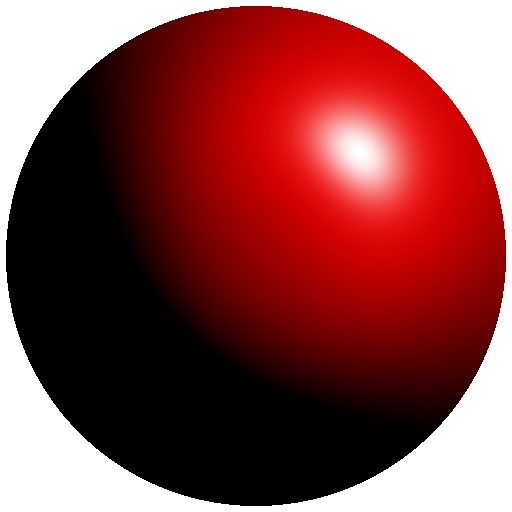

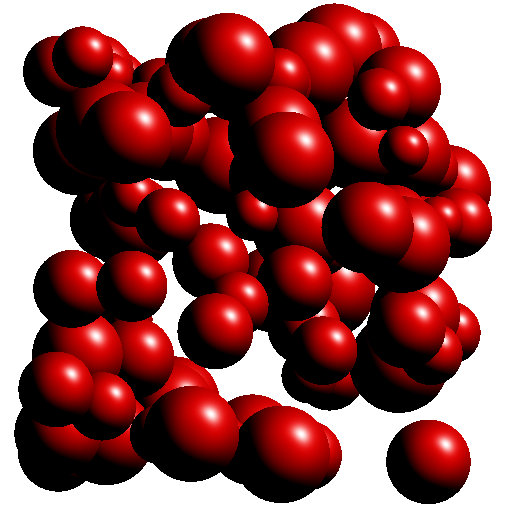

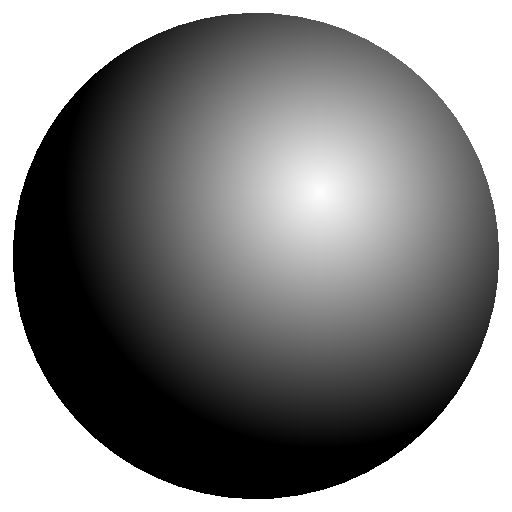

This book is open-access (i.e. it's free to read at this address) because I believe knowledge should be free. However, if you think the book is worth a few dollars, you can give me a few euros (5€ or 10€). This money will help me travel to Python conferences and write other books as well. If you don't have money, that's fine: just enjoy the book and spread the word about it. The teaser image above comes from the artwork section of my website. It was made some years ago using the POV-Ray (Persistence of Vision) raytracer. I like it very much because it is a kind of résumé of my research.

About the author

I am a full-time research scientist at Inria which is the French national institute for research in computer science and control. This is a public scientific and technological establishment (EPST) under the double supervision of the Research & Education Ministry, and the Ministry of Economy Finance and Industry. I'm working within the Mnemosyne project which lies at the frontier between integrative and computational neuroscience in association with the Institute of Neurodegenerative Diseases, the Bordeaux laboratory for research in computer science (LaBRI), the University of Bordeaux and the national center for scientific research (CNRS).

I've been using Python for more than 15 years and numpy for more than 10 years for modeling in neuroscience, machine learning and advanced visualization (OpenGL). I'm the author of several online resources and tutorials (Matplotlib, numpy, OpenGL) and I've been teaching Python, numpy and scientific visualization at the University of Bordeaux and at various conferences and schools worldwide (SciPy, EuroSciPy, etc.). I'm also the author of the popular article Ten Simple Rules for Better Figures, a popular matplotlib tutorial and an open access book, From Python To Numpy.

About this book

This book has been written in restructured text format and generated using a customized version of the docutils rst2html.py command line (available from the docutils python package) and a custom template.

If you want to rebuild the html output, from the top directory, type:

    $ ./rst2html.py --link-stylesheet \
                    --cloak-email-addresses \
                    --toc-top-backlinks \
                    --stylesheet book.css \
                    --stylesheet-dirs . \
                    book.rst book.html

Or you can use the provided make.sh shell script.

The sources are available from https://github.com/rougier/python-opengl.

One last point: I wrote the book in a kind of modern Kerouac style, so that you can download it once and continue reading it offline. Initial loading may be slow though.

Prerequisites

This is neither a Python nor a NumPy beginner guide and you should have an intermediate level in both Python and NumPy. No prior knowledge of OpenGL is necessary because I'll explain everything.

Conventions

I will use usual naming conventions. If not stated explicitly, each script should import numpy, scipy and glumpy as:

import numpy as np

We'll use up-to-date versions (at the date of writing, i.e. August, 2017) of the different packages:

| Packages | Version |

|---|---|

| Python | 3.6.0 |

| Numpy | 1.12.0 |

| Scipy | 0.18.1 |

| Cython | 0.25.2 |

| Triangle | 20170106 |

| Glumpy | 1.0.6 |

How to contribute

If you want to contribute to this book, you can:

- Report issues (https://github.com/rougier/python-opengl/issues)

- Suggest improvements (https://github.com/rougier/python-opengl/pulls)

- Correct English (https://github.com/rougier/python-opengl/issues)

- Star the project (https://github.com/rougier/python-opengl)

- Suggest a more responsive design for the HTML Book

- Spread the word about this book (Reddit, Hacker News, etc.)

Publishing

If you're an editor interested in publishing this book, you can contact me if you agree to have this version and all subsequent versions open access (i.e. online at this address), you know how to deal with restructured text (Word is not an option), you provide a real added-value as well as supporting services, and more importantly, you have a truly amazing latex book template (and be warned that I'm a bit picky about typography & design: Edward Tufte is my hero). Still here?

License

Book

This work is licensed under a Creative Commons Attribution-Non Commercial-Share Alike 4.0 International License. You are free to:

- Share — copy and redistribute the material in any medium or format

- Adapt — remix, transform, and build upon the material

The licensor cannot revoke these freedoms as long as you follow the license terms.

Under the following terms:

- Attribution — You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use.

- NonCommercial — You may not use the material for commercial purposes.

- ShareAlike — If you remix, transform, or build upon the material, you must distribute your contributions under the same license as the original.

Code

The code is licensed under the OSI-approved BSD 2-Clause License.

Introduction


Before diving into OpenGL programming, it is important to have a look at the whole GL landscape because it is actually quite complex and you can easily get lost among the different actors, terms and definitions. The teaser image above shows the face of a character from the Wolfenstein games, once in 1992 and once in 2015. You can see that computer graphics has evolved a lot in 25 years.

A bit of history

OpenGL is 25 years old! Since the first release in 1992, a lot has happened (and is still happening, with the newly released Vulkan API and the 4.6 GL release). Consequently, before diving into the book, it is important to understand how the OpenGL API has evolved over the years. While the first API (1.x) did not change much during its first twelve years, a big change occurred in 2004 with the introduction of the dynamic pipeline (OpenGL 2.x), i.e. the use of shaders that give direct access to the GPU. Before this version, OpenGL used a fixed pipeline that made it easy to rapidly prototype ideas. It was simple but not very powerful because the user had little control over the graphic pipeline. This is why it was deprecated more than ten years ago, and you don't want to use it today. The problem is that there are a lot of tutorials online that still use this fixed pipeline, and because most of them were written before modern GL, they are not even aware (and cannot be) that they use a deprecated API.

How can you tell whether a tutorial addresses the fixed pipeline? It's relatively easy. It will contain GL commands such as:

glVertex, glColor, glLight, glMaterial, glBegin, glEnd, glMatrix, glMatrixMode, glLoadIdentity, glPushMatrix, glPopMatrix, glRect, glPolygonMode, glBitmap, glAlphaFunc, glNewList, glDisplayList, glPushAttrib, glPopAttrib, glVertexPointer, glColorPointer, glTexCoordPointer, glNormalPointer, glRotate, glTranslate, glScale, glCallList.

If you see any of them in a tutorial, run away: it is almost certainly a tutorial that addresses the fixed pipeline, and you don't want to read it because what you would learn is already useless. If you look at the GL history below, you'll realize that the "modern" GL API is already 13 years old while the fixed pipeline was deprecated more than 10 years ago.
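The check described above can be automated with a few lines of Python. The following is only a rough sketch: the helper name `find_fixed_pipeline_calls` is hypothetical, the command list is a subset of the names given above, and the regular expression assumes the usual GL naming scheme (an optional size and type suffix such as `3f` or `fv` before the opening parenthesis), so that modern calls such as `glVertexAttribPointer` are not reported by mistake.

```python
import re

# A subset of the legacy command names listed above. Several of them are
# families: glVertex stands for glVertex2f, glVertex3fv, and so on.
FIXED_PIPELINE_COMMANDS = [
    "glVertex", "glColor", "glLight", "glMaterial", "glBegin", "glEnd",
    "glMatrixMode", "glLoadIdentity", "glPushMatrix", "glPopMatrix",
    "glRect", "glBitmap", "glAlphaFunc", "glNewList", "glPushAttrib",
    "glPopAttrib", "glVertexPointer", "glColorPointer", "glTexCoordPointer",
    "glNormalPointer", "glRotate", "glTranslate", "glScale",
]

# Optional size/type suffix (assumption: standard GL naming, e.g. 3f, 4ub, fv)
SUFFIX = r"(?:[1-4]?(?:ub|us|ui|[bsifd])v?)?"
PATTERN = re.compile(
    r"\b(" + "|".join(FIXED_PIPELINE_COMMANDS) + r")" + SUFFIX + r"\s*\("
)

def find_fixed_pipeline_calls(source):
    """Return the sorted list of legacy command names called in `source`."""
    return sorted({match.group(1) for match in PATTERN.finditer(source)})

legacy = "glBegin(GL_TRIANGLES); glColor3f(1,0,0); glVertex2f(0,0); glEnd();"
modern = "gl.glVertexAttribPointer(loc, 2, gl.GL_FLOAT, False, 8, None)"

print(find_fixed_pipeline_calls(legacy))  # ['glBegin', 'glColor', 'glEnd', 'glVertex']
print(find_fixed_pipeline_calls(modern))  # []
```

An empty result does not prove that a tutorial is modern; it only means that none of the listed commands was found.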

Figure: timeline of the GL APIs from 1992 to 2017, with the versions released over that period.

- OpenGL, fixed pipeline: 1.0, 1.1, 1.2, 1.3, 1.4, 1.5
- OpenGL, programmable pipeline (modern OpenGL, from 2004): 2.0, 2.1, 3.0, 3.1, 3.2, 3.3, 4.0, 4.1, 4.2, 4.3, 4.4, 4.5, 4.6
- GLES: 1.0, 2.0 (highlighted), 3.0, 3.1, 3.2
- GLSL: 1.1, 1.2, 1.3, 1.4, 1.5, 4.0, 4.1, 4.2, 4.3, 4.4, 4.5, 4.6
- WebGL: 1.0, 2.0
- Vulkan: 1.0

From OpenGL wiki: In 1992, Mark Segal and Kurt Akeley authored the OpenGL 1.0 specification which formalized a graphics API and made cross platform 3rd party implementation and support viable. In 2004, OpenGL 2.0 incorporated the significant addition of the OpenGL Shading Language (also called GLSL), a C like language with which the transformation and fragment shading stages of the pipeline can be programmed. In 2008, OpenGL 3.0 added the concept of deprecation: marking certain features as subject to removal in later versions.

- Open Graphics Library (OpenGL)

- OpenGL is a cross-language, cross-platform application programming interface (API) for rendering 2D and 3D vector graphics. The API is typically used to interact with a graphics processing unit (GPU), to achieve hardware-accelerated rendering.

- OpenGL for Embedded Systems (OpenGL ES or GLES)

- GLES is a subset of the OpenGL computer graphics rendering application programming interface (API) for rendering 2D and 3D computer graphics such as those used by video games, typically hardware-accelerated using a graphics processing unit (GPU).

- OpenGL Shading Language (GLSL)

- GLSL is a high-level shading language with a syntax based on the C programming language. It was created by the OpenGL ARB (OpenGL Architecture Review Board) to give developers more direct control of the graphics pipeline

- Web Graphics Library (WebGL)

- WebGL is a JavaScript API for rendering 3D graphics within any compatible web browser without the use of plug-ins. WebGL is integrated completely into all the web standards of the browser allowing GPU accelerated usage of physics and image processing and effects as part of the web page canvas.

- Vulkan (VK)

- Vulkan is a low-overhead, cross-platform 3D graphics and compute API first announced at GDC 2015 by the Khronos Group. Like OpenGL, Vulkan targets high-performance realtime 3D graphics applications such as video games and interactive media across all platforms, and can offer higher performance and more balanced CPU/GPU usage, much like Direct3D 12 and Mantle.

For this book, we'll use the GLES 2.0 API, which lets us use the modern GL API while staying relatively simple. For comparison, have a look at the table below that gives the number of functions and constants for each version of the GL API. Note that once you have mastered GLES 2.0, it's only a matter of reading the documentation to take advantage of more advanced versions because the core concepts remain the same (which is not the case for the new Vulkan API).

Note

The numbers of functions and constants were computed using the code/chapter-02/registry.py program that parses the gl.xml file that defines the OpenGL and OpenGL ES API Registry.

| Version | Constants | Functions |
|---|---|---|
| GL 1.0 | 0 | 306 |
| GL 1.1 | 528 | 336 |
| GL 1.2 | 569 | 340 |
| GL 1.3 | 665 | 386 |
| GL 1.4 | 713 | 433 |
| GL 1.5 | 763 | 452 |
| GL 2.0 | 847 | 545 |
| GL 2.1 | 870 | 551 |
| GL 3.0 | 1104 | 635 |
| GL 3.1 | 1165 | 647 |
| GL 3.2 | 800 | 316 |
| GL 3.3 | 816 | 344 |
| GL 4.0 | 894 | 390 |
| GL 4.1 | 929 | 478 |
| GL 4.2 | 1041 | 490 |
| GL 4.3 | 1302 | 534 |
| GL 4.4 | 1321 | 543 |
| GL 4.5 | 1343 | 653 |
| GLES 1.0 | 333 | 106 |
| GLES 2.0 | 301 | 142 |

Modern OpenGL

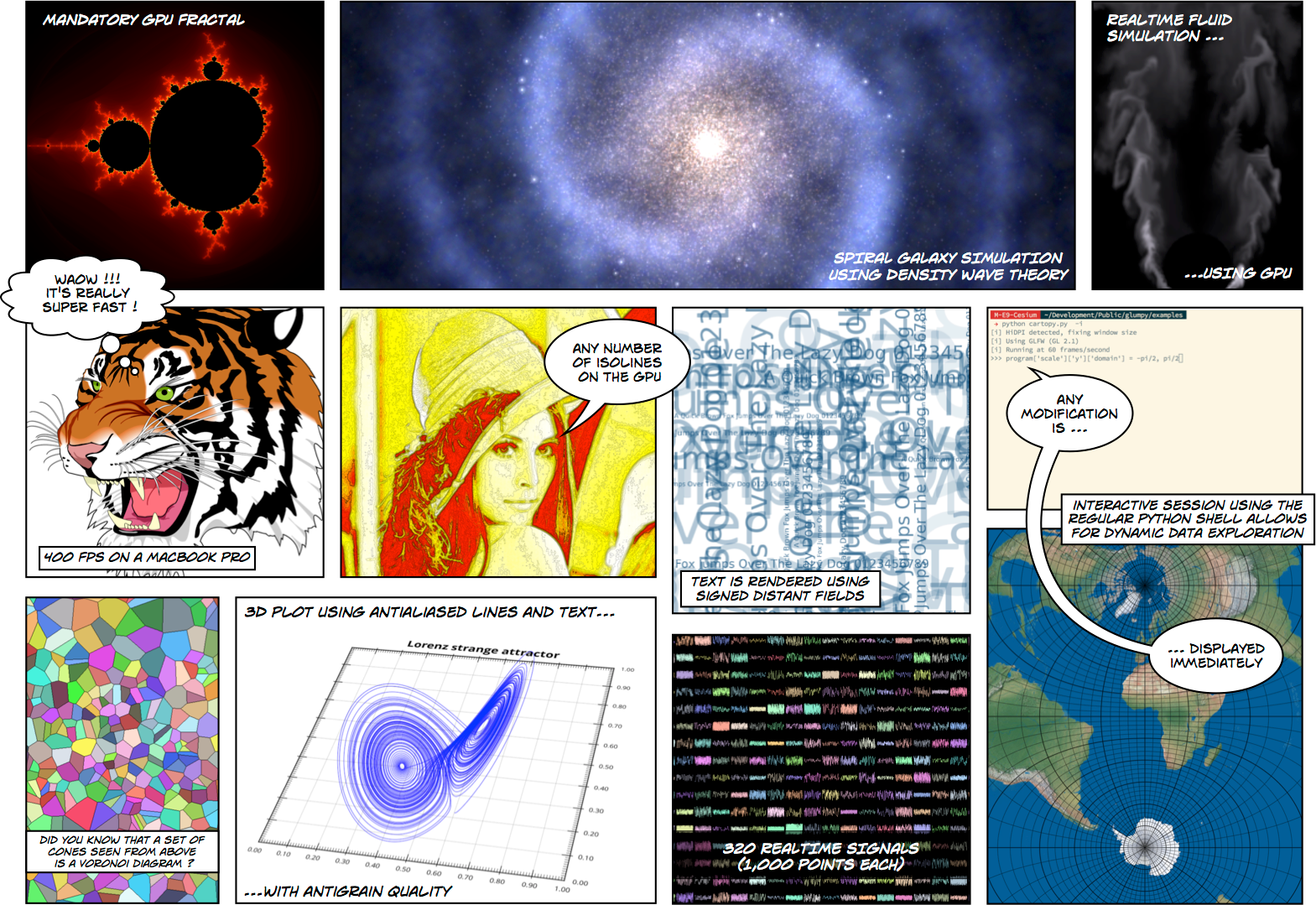

The graphic pipeline

Note

The shader language is called GLSL. Its versions go from 1.0 to 1.5, after which each version takes the number of the corresponding OpenGL version. The latest version at the time of writing is 4.6 (June 2017).

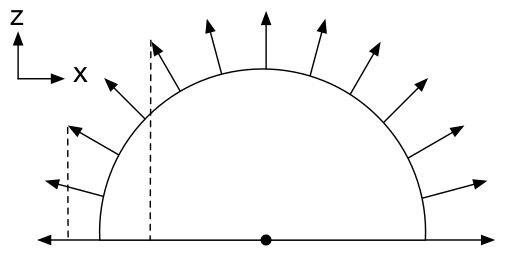

If you want to understand modern OpenGL, you have to understand the graphic pipeline and shaders. Shaders are small programs (written in a C-like language) that are built on the GPU and executed during the rendering pipeline. Depending on their nature (there are many types, depending on the version of OpenGL you're using), they act at different stages of the rendering pipeline. To simplify this tutorial, we'll use only vertex and fragment shaders, as shown in the figure.

A vertex shader acts on vertices and is supposed to output the vertex position (gl_Position) on the viewport (i.e. screen). A fragment shader acts at the fragment level and is supposed to output the color (gl_FragColor) of the fragment. Hence, a minimal vertex shader is:

void main() { gl_Position = vec4(0.0,0.0,0.0,1.0); }

while a minimal fragment shader would be:

void main() { gl_FragColor = vec4(0.0,0.0,0.0,1.0); }

These two shaders are not very useful because the first one will always output the null vertex (gl_Position is a special variable) while the second will only output the black color for any fragment (gl_FragColor is also a special variable). We'll see later how to make them do more useful things.

One question remains: when exactly are those shaders executed? The vertex shader is executed for each vertex that is given to the rendering pipeline (we'll see what that means exactly later) and the fragment shader is executed for each fragment (= pixel) that is generated after the vertex stage. For example, in the simple figure above, the vertex shader would be called 3 times, once for each vertex (1, 2 and 3), while the fragment shader would be executed 21 times, once for each fragment.

Buffers

The next question is thus: where do those vertices come from? The idea of modern GL is that vertices are stored on the CPU and need to be uploaded to the GPU before rendering. The way to do that is to build buffers on the CPU and to send these buffers to the GPU. If your data does not change, there is no need to upload it again. That is the big difference with the previous fixed pipeline, where data was uploaded at each rendering call (only display lists were built into GPU memory).

But what is the structure of a vertex? OpenGL does not assume anything about your vertex structure and you're free to attach as much information as you need to each vertex. The only condition is that all vertices from a buffer have the same structure (possibly with different content). This again is a big difference with the fixed pipeline, where OpenGL was doing a lot of complex rendering work for you (projections, lighting, normals, etc.) with an implicit fixed vertex structure. The good news is that you're now free to do anything you want, but the bad news is that you have to program everything yourself.

Let's take a simple example of a vertex structure where we want each vertex to hold a position and a color. The easiest way to do that in python is to use a structured array using numpy:

data = np.zeros(4, dtype = [ ("position", np.float32, 3), ("color", np.float32, 4)] )

We just created a CPU buffer with 4 vertices, each of them having a position (3 floats for x,y,z coordinates) and a color (4 floats for red, green, blue and alpha channels). Note that we explicitly chose to have 3 coordinates for position but we could have chosen to have only 2 if we were to work in two dimensions. The same holds true for color: we could have used only 3 channels (r,g,b) if we did not want to use transparency. This would save some bytes for each vertex. Of course, for 4 vertices, this does not really matter, but it will matter if your data size grows to one or ten million vertices.
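To get a feeling for the numbers, here is a small sketch that compares the two vertex layouts discussed above. The names `full` and `compact` and the figure of one million vertices are chosen only for illustration.

```python
import numpy as np

# Layout used above: x,y,z position and r,g,b,a color
full = np.dtype([("position", np.float32, 3), ("color", np.float32, 4)])

# Leaner layout: x,y position and r,g,b color (no transparency)
compact = np.dtype([("position", np.float32, 2), ("color", np.float32, 3)])

n = 1_000_000
for name, dtype in (("xyz + rgba", full), ("xy + rgb", compact)):
    data = np.zeros(n, dtype=dtype)
    print(f"{name}: {dtype.itemsize} bytes per vertex, "
          f"{data.nbytes / 1e6:.0f} MB for {n} vertices")
```

With 32-bit floats, the first layout takes 28 bytes per vertex and the second one 20 bytes, that is, 8 MB less for one million vertices.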

Variables

Now, we need to explain to our shaders what to do with these buffers and how to connect them together. So, let's consider again a CPU buffer of 4 vertices using 2 floats for position and 4 floats for color:

data = np.zeros(4, dtype = [ ("position", np.float32, 2), ("color", np.float32, 4)] )

We need to tell the vertex shader that it will have to handle vertices where a position is a tuple of 2 floats and color is a tuple of 4 floats. This is precisely what attributes are meant for. Let us change slightly our previous vertex shader:

    attribute vec2 position;
    attribute vec4 color;
    void main()
    {
        gl_Position = vec4(position, 0.0, 1.0);
    }

This vertex shader now expects a vertex to possess 2 attributes, one named position and one named color, with specified types (vec2 means a tuple of 2 floats and vec4 means a tuple of 4 floats). It is important to note that even if we labeled the first attribute position, this attribute is not yet bound to the actual position field in the numpy array. We'll need to do it explicitly at some point in our program: there is no magic that will bind the numpy array field to the right attribute, you'll have to do it yourself, but we'll see that later.

The second type of information we can feed the vertex shader is the uniform, which may be considered as a constant value (across all the vertices). Let's say, for example, that we want to scale all the vertices by a constant factor scale. We would thus write:

    uniform float scale;
    attribute vec2 position;
    attribute vec4 color;
    void main()
    {
        gl_Position = vec4(position*scale, 0.0, 1.0);
    }

Last type is the varying type that is used to pass information between the vertex stage and the fragment stage. So let us suppose (again) we want to pass the vertex color to the fragment shader, we now write:

    uniform float scale;
    attribute vec2 position;
    attribute vec4 color;
    varying vec4 v_color;
    void main()
    {
        gl_Position = vec4(position*scale, 0.0, 1.0);
        v_color = color;
    }

and then in the fragment shader, we write:

    varying vec4 v_color;
    void main()
    {
        gl_FragColor = v_color;
    }

The question is: what is the value of v_color inside the fragment shader? If you look at the figure that introduced the GL pipeline, we have 3 vertices and 21 fragments. What is the color of each individual fragment?

The answer is the interpolation of the colors of all 3 vertices. This interpolation weights each vertex according to the position of the fragment relative to it (the closer the fragment is to a vertex, the larger the weight of that vertex). This is a very important concept to understand. Any varying value is interpolated between the vertices that compose the elementary item (mostly, a line or a triangle).

Ok, enough for now, we'll see an explicit example in the next chapter.

State of the union

Last, but not least, we need to access the OpenGL library from within Python and we have mostly two solutions at our disposal. Either we use pure bindings and we have to program everything (see next chapter) or we use an engine that provides a lot of convenient functions that ease the development. We'll first use the PyOpenGL bindings before using the glumpy library that offers a tight integration with numpy.

Bindings

- Pyglet is a pure python cross-platform application framework intended for game development. It supports windowing, user interface event handling, OpenGL graphics, loading images and videos and playing sounds and music. It works on Windows, OS X and Linux.

- PyOpenGL is the most common cross platform Python binding to OpenGL and related APIs. The binding is created using the standard ctypes library, and is provided under an extremely liberal BSD-style Open-Source license.

- ModernGL is a wrapper over OpenGL that simplifies the creation of simple graphics applications like scientific simulations, small games or user interfaces. Usually, acquiring in-depth knowledge of OpenGL requires a steep learning curve. In contrast, ModernGL is easy to learn and use, moreover it is capable of rendering with the same performance and quality, with less code written.

- Ctypes bindings can also be generated quite easily thanks to the gl.xml file provided by the Khronos group that defines the OpenGL and OpenGL ES API Registry. The numbers of functions and constants given in the table above were computed using the code/chapter-02/registry.py program that parses the gl.xml file for each API and version and counts the relevant features.

Engines

- The Visualization Toolkit (VTK) is an open-source, freely available software system for 3D computer graphics, image processing, and visualization. It consists of a C++ class library and several interpreted interface layers including Tcl/Tk, Java, and Python.

- Processing is a programming language, development environment, and online community. Since 2001, Processing has promoted software literacy within the visual arts and visual literacy within technology. Today, there are tens of thousands of students, artists, designers, researchers, and hobbyists who use Processing for learning, prototyping, and production.

- NodeBox for OpenGL is a free, cross-platform library for generating 2D animations with Python programming code. It is built on Pyglet and adopts the drawing API from NodeBox for Mac OS X. It has built-in support for paths, layers, motion tweening, hardware-accelerated image effects, simple physics and interactivity.

- Panda3D is a 3D engine: a library of subroutines for 3D rendering and game development. The library is C++ with a set of Python bindings. Game development with Panda3D usually consists of writing a Python or C++ program that controls the Panda3D library.

- VPython makes it easy to create navigable 3D displays and animations, even for those with limited programming experience. Because it is based on Python, it also has much to offer for experienced programmers and researchers.

Libraries

Note

Even though glumpy and vispy share a number of concepts, they are different. vispy offers a high-level interface that may be convenient in some situations but this tends to hide the internal machinery. This is one of the reasons we'll be using glumpy instead. The other reason is that I'm the author of glumpy (and, in fact, one of the authors of vispy as well).

- Glumpy is a python library for scientific visualization that is fast, scalable and beautiful. Glumpy leverages the computational power of modern Graphics Processing Units (GPUs) through the OpenGL library to display very large datasets and offers an intuitive interface between numpy and modern OpenGL. We'll use it extensively in this book.

- Vispy is the sister project of glumpy. It is a high-performance interactive 2D/3D data visualization library and offers a high-level interface for scientific visualization. The difference between glumpy and vispy is approximately the same as the difference between numpy and scipy, even though vispy is independent of glumpy and vice-versa.

Quickstart


For the really impatient, you can try to run the code in the teaser image above. If this works, a window should open on your desktop with a red color in the background. If you now want to understand how this works, you'll have to read the text below.

Preliminaries

The main difficulty for newcomers to modern OpenGL programming is that it requires understanding a lot of different concepts at once and then performing a lot of operations before rendering anything on screen. This complexity implies that there are many places where your code can be wrong, both at the conceptual and at the code level. To illustrate this difficulty, we'll write our first OpenGL program using the raw interface. Our goal is to display a simple colored quad (i.e. a red square).

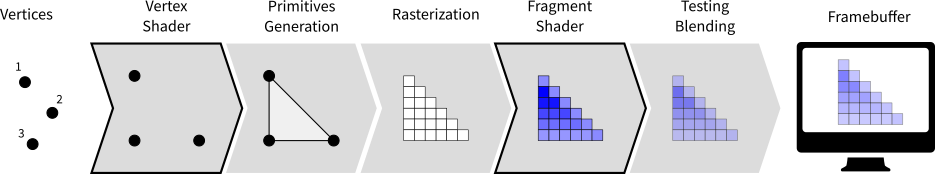

Normalized Device Coordinates

Before even diving into actual code, it is important to understand first how OpenGL handles coordinates. More precisely, OpenGL considers only coordinates (x,y,z) that fall into the space where -1 ≤ x,y,z ≤ +1. Any coordinates that are outside this range will be discarded or clipped (i.e. won't be visible on screen). This is called Normalized Device Coordinates, or NDC for short. This is something you cannot change because it is part of the OpenGL API and implemented in your hardware (GPU). Consequently, even if you intend to render the whole universe, you'll ultimately have to fit it into this small volume.

The second important fact to know is that x coordinates increase from left to right and y coordinates increase from bottom to top. The latter is noticeably different from the usual screen convention and this might cause some problems, especially when you're dealing with the mouse pointer, whose y coordinate goes the other way around.
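As an illustration of this y-axis flip, here is a minimal sketch that converts a mouse position given in window coordinates into normalized device coordinates. The function name `window_to_ndc` is hypothetical, and the sketch assumes that the window origin is the top-left corner with y pointing down, as reported by most windowing toolkits.

```python
def window_to_ndc(x, y, width, height):
    """Map window coordinates (origin at top-left, y pointing down) to
    normalized device coordinates (origin at center, y pointing up)."""
    ndc_x = 2.0 * x / width - 1.0
    ndc_y = 1.0 - 2.0 * y / height   # the y axis is flipped
    return ndc_x, ndc_y

print(window_to_ndc(0, 0, 512, 512))      # top-left corner     -> (-1.0, 1.0)
print(window_to_ndc(256, 256, 512, 512))  # window center       -> (0.0, 0.0)
print(window_to_ndc(512, 512, 512, 512))  # bottom-right corner -> (1.0, -1.0)
```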

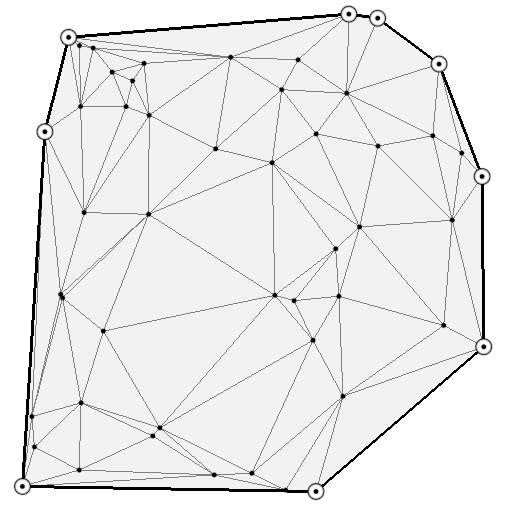

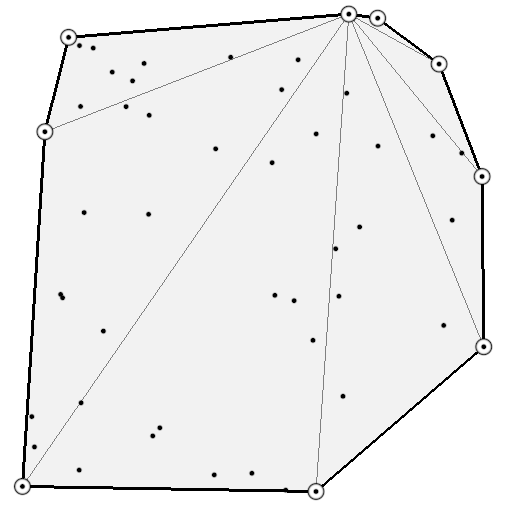

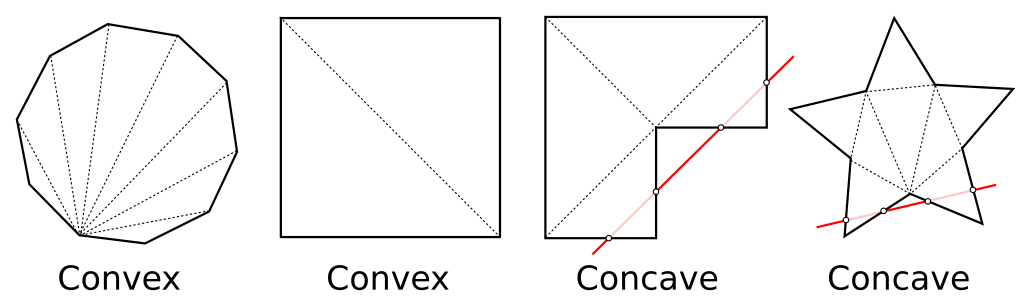

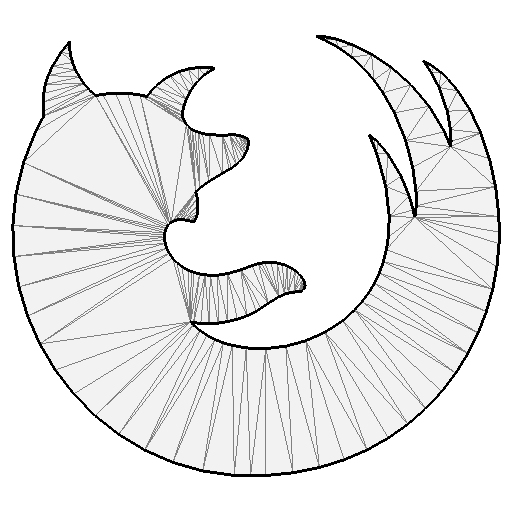

Triangulation

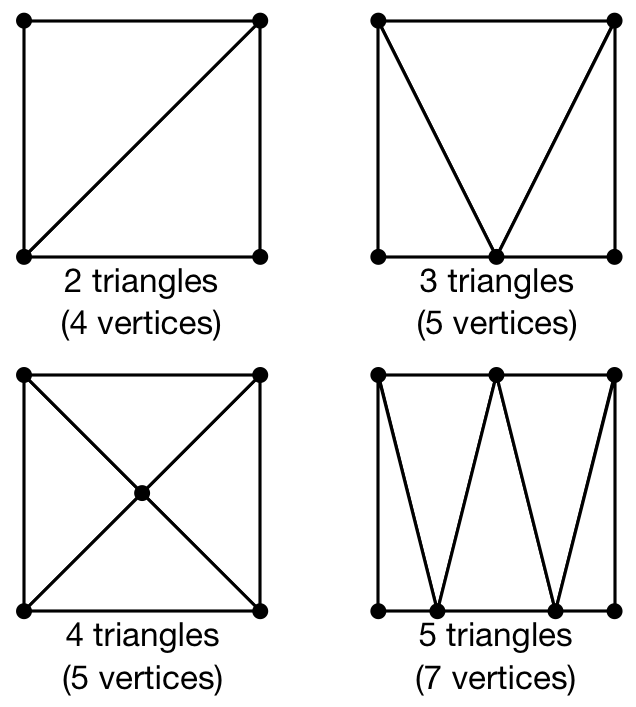

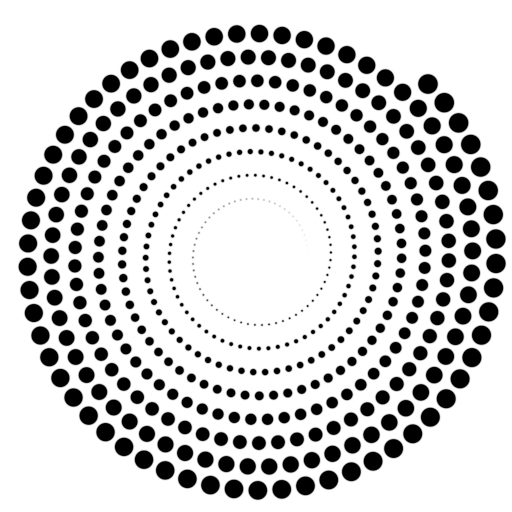


Triangulating a surface means finding a set of triangles that covers it. This can be a tedious process but fortunately, there exist many different methods and algorithms to perform such a triangulation automatically for any 2D or 3D surface. The quality of a triangulation is measured in terms of its closeness to the approximated surface, the number of triangles it needs (the fewer, the better) and the homogeneity of the triangles (we prefer triangles that have more or less the same size and we want to avoid degenerate triangles).

In our case, we want to render a square and we need to find the proper triangulation (which is not unique, as illustrated in the figure). Since we want to minimize the number of triangles, we'll use the 2-triangle solution that requires only 4 (shared) vertices corresponding to the four corners of the quad. However, you can see from the figure that we could have used different triangulations using more vertices, and later in this book we will do just that (but for a reason).

Considering the NDC, our quad will thus be composed of two triangles:

- One triangle described by vertices (-1,+1), (+1,+1), (-1,-1)
- One triangle described by vertices (+1,+1), (-1,-1), (+1,-1)

Here we can see that vertices (-1,-1) and (+1,+1) are common to both triangles. So instead of using 6 vertices to describe the two triangles, we can re-use the common vertices to describe the whole quad. Let's name them:

- V₀: (-1,+1)
- V₁: (+1,+1)
- V₂: (-1,-1)
- V₃: (+1,-1)

Our quad can now be described using triangle (V₀,V₁,V₂) and triangle (V₁,V₂,V₃). This is exactly what we need to tell OpenGL.
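On the Python side, this sharing of vertices can be sketched with numpy by storing the four corners once and describing each triangle as a triple of indices. The array names `V`, `triangles` and `expanded` are only illustrative.

```python
import numpy as np

# The four corners of the quad (V0, V1, V2, V3) in normalized device coordinates
V = np.array([(-1, +1), (+1, +1), (-1, -1), (+1, -1)], dtype=np.float32)

# Each triangle is a triple of indices into V; V1 and V2 are used twice
triangles = np.array([(0, 1, 2), (1, 2, 3)], dtype=np.uint32)

# Expanding the indices gives back the 6 explicit vertices (2 triangles x 3)
expanded = V[triangles]

print(expanded.shape)             # (2, 3, 2)
print(V.nbytes, expanded.nbytes)  # 32 48
```

The saving is negligible for a single quad, but the same idea applies to meshes where most vertices are shared by several triangles.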

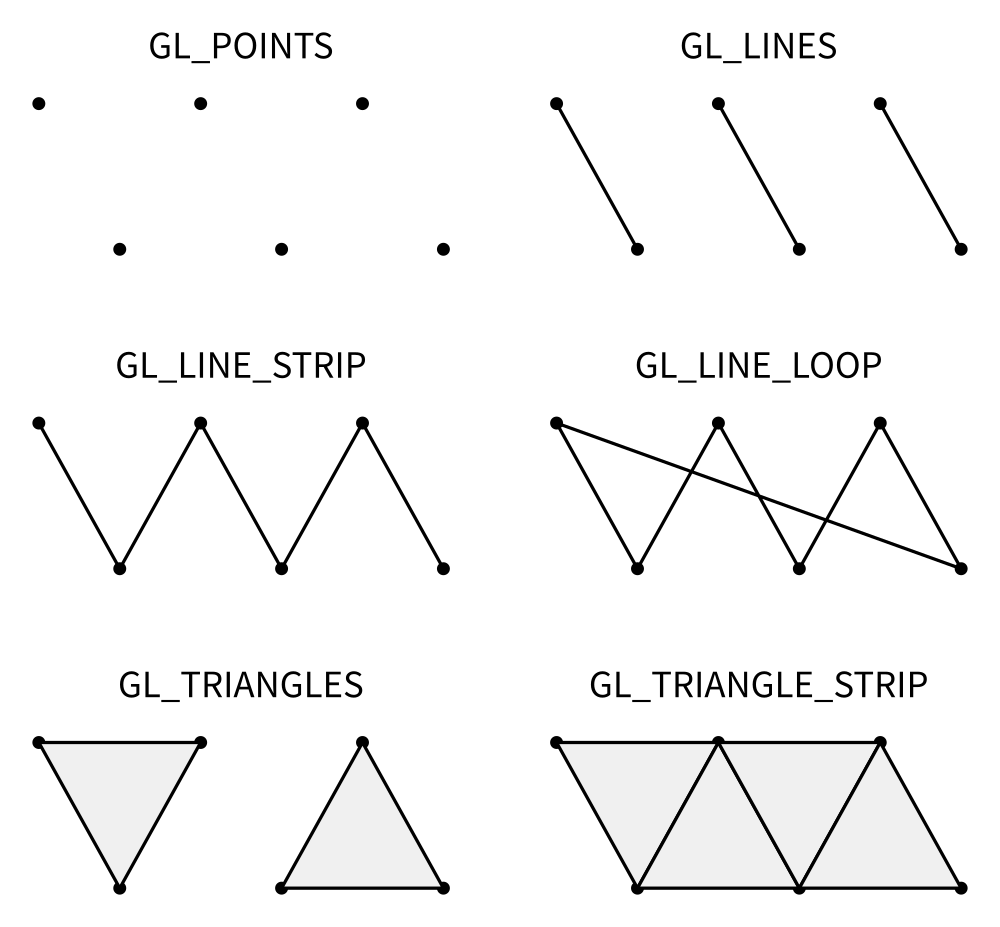

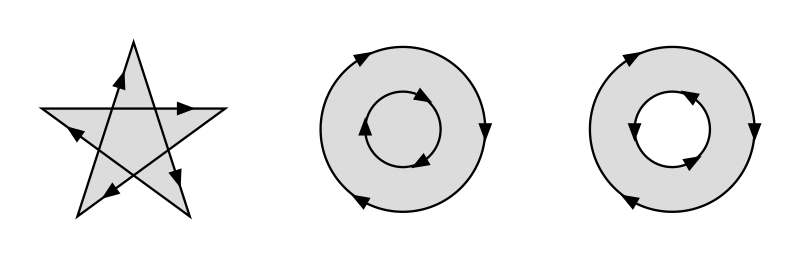

GL Primitives

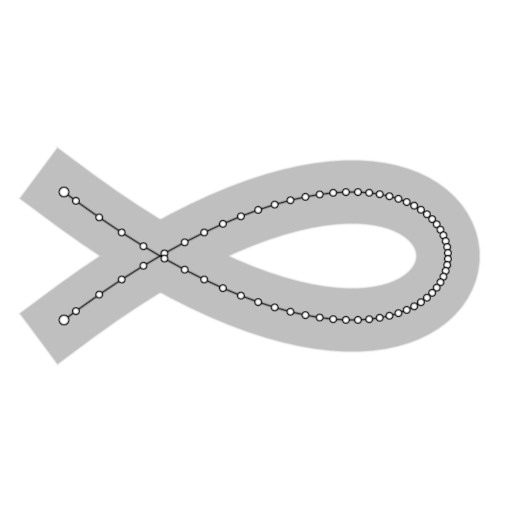


Ok, now things are getting serious because we need to actually tell OpenGL what to do with the vertices, i.e. how to render them. What do they describe in terms of geometrical primitives? This is quite an important topic since it determines how fragments will actually be generated, as illustrated in the figure.

Mostly, OpenGL knows how to draw (ugly) points, (ugly) lines and (ugly) triangles. For lines and triangles, there exist some variations depending on whether you want to specify very precisely what to draw or whether you can take advantage of some implicit assumptions. Let's consider lines first. Given a set of four vertices (V₀,V₁,V₂,V₃), you might want to draw the segments (V₀,V₁) and (V₂,V₃) using GL_LINES, a broken line (V₀,V₁,V₂,V₃) using GL_LINE_STRIP, or a closed broken line (V₀,V₁,V₂,V₃,V₀) using GL_LINE_LOOP. For triangles, you can either specify each triangle individually using GL_TRIANGLES or tell OpenGL that triangles follow an implicit structure using GL_TRIANGLE_STRIP. For example, considering a set of vertices (Vᵢ), GL_TRIANGLE_STRIP will produce triangles (Vᵢ,Vᵢ₊₁,Vᵢ₊₂). There exist other primitives but we won't use them in this book because they're mainly related to geometry shaders, which are not introduced.

If you remember the previous section, where we explained that our quad can be described using triangle (V₀,V₁,V₂) and triangle (V₁,V₂,V₃), you can now see that we can take advantage of the GL_TRIANGLE_STRIP primitive because we took care to describe the two triangles following this implicit structure.
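To make these implicit structures concrete, the following sketch lists which segments or triangles each primitive type would assemble from a sequence of vertex indices. These pure Python helpers are hypothetical and only mimic what OpenGL does internally. They also ignore winding order, which a real triangle strip alternates from one triangle to the next.

```python
def lines(indices):
    """GL_LINES: independent segments (V0,V1), (V2,V3), ..."""
    return [(indices[i], indices[i + 1]) for i in range(0, len(indices) - 1, 2)]

def line_strip(indices):
    """GL_LINE_STRIP: connected segments (V0,V1), (V1,V2), ..."""
    return [(indices[i], indices[i + 1]) for i in range(len(indices) - 1)]

def line_loop(indices):
    """GL_LINE_LOOP: a line strip closed by a last segment back to V0."""
    return line_strip(indices) + [(indices[-1], indices[0])]

def triangles(indices):
    """GL_TRIANGLES: independent triangles (V0,V1,V2), (V3,V4,V5), ..."""
    return [tuple(indices[i:i + 3]) for i in range(0, len(indices) - 2, 3)]

def triangle_strip(indices):
    """GL_TRIANGLE_STRIP: triangles (Vi, Vi+1, Vi+2) for each valid i."""
    return [tuple(indices[i:i + 3]) for i in range(len(indices) - 2)]

quad = [0, 1, 2, 3]
print(lines(quad))           # [(0, 1), (2, 3)]
print(line_strip(quad))      # [(0, 1), (1, 2), (2, 3)]
print(line_loop(quad))       # [(0, 1), (1, 2), (2, 3), (3, 0)]
print(triangles(quad))       # [(0, 1, 2)]
print(triangle_strip(quad))  # [(0, 1, 2), (1, 2, 3)]
```

With only four vertices, GL_TRIANGLES can build a single triangle, while GL_TRIANGLE_STRIP builds the two triangles that cover the quad.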

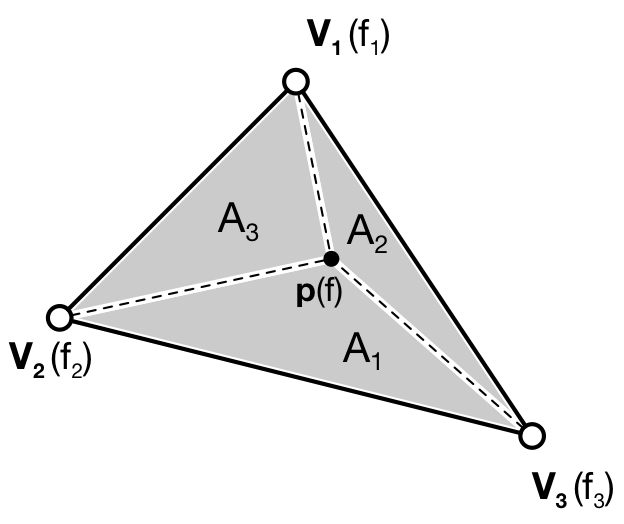

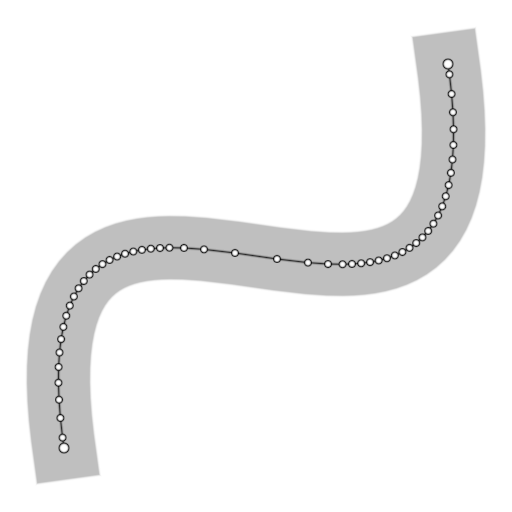

Interpolation

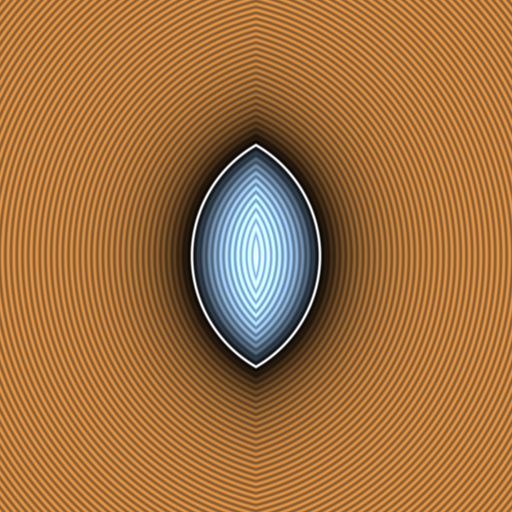

Figure: the interpolated value f of a fragment p is given by f = 𝛌₁f₁ + 𝛌₂f₂ + 𝛌₃f₃.

The choice of the triangle as the only surface primitive is not arbitrary, because a triangle offers a nice and intuitive way to interpolate a value at any point inside it. If you look back at the graphic pipeline as it was introduced in the Modern OpenGL section, you can see that rasterisation requires OpenGL to generate fragments inside the triangle but also to interpolate values (colors in the figure). A legitimate question is then: if I have a triangle (V₁,V₂,V₃), each vertex having (for example) a different color, what is the color of a fragment p inside the triangle? The answer is barycentric interpolation, as illustrated in the figure.

More precisely, for any point p inside a triangle A = (V₁,V₂,V₃), we consider the triangles:

- A₁ = (p,V₂,V₃)
- A₂ = (p,V₁,V₃)
- A₃ = (p,V₁,V₂)

And we can define (using the areas of the triangles):

- 𝛌₁ = A₁/A
- 𝛌₂ = A₂/A
- 𝛌₃ = A₃/A

Now, if we attach a value f₁ to vertex V₁, f₂ to vertex V₂ and f₃ to vertex V₃, the interpolated value f of p is given by f = 𝛌₁f₁ + 𝛌₂f₂ + 𝛌₃f₃. You can check for yourself that if the point p is on an edge of the triangle, the resulting interpolated value f is the linear interpolation of the values at the two vertices defining that edge.

This barycentric interpolation is important to understand even if it is done automatically by OpenGL (with some variation to take projection into account). We took the example of colors, but the same interpolation scheme holds true for any value you pass from the vertex shader to the fragment shader. And this property will be used and abused in this book.
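The area-based definition above translates almost directly into numpy. The sketch below is only an illustration of the formula, not of how a GPU implements it: the function names are hypothetical, the triangle is assumed to be two-dimensional and non-degenerate, and the point p is assumed to lie inside the triangle or on its border (the unsigned areas used here give wrong weights outside).

```python
import numpy as np

def triangle_area(a, b, c):
    """Unsigned area of the 2D triangle (a, b, c)."""
    (ax, ay), (bx, by), (cx, cy) = a, b, c
    return 0.5 * abs((bx - ax) * (cy - ay) - (cx - ax) * (by - ay))

def barycentric_interpolate(p, V1, V2, V3, f1, f2, f3):
    """Interpolate the values f1, f2, f3 attached to V1, V2, V3 at point p."""
    A = triangle_area(V1, V2, V3)
    l1 = triangle_area(p, V2, V3) / A
    l2 = triangle_area(p, V1, V3) / A
    l3 = triangle_area(p, V1, V2) / A
    return l1 * f1 + l2 * f2 + l3 * f3

V1, V2, V3 = (0.0, 0.0), (1.0, 0.0), (0.0, 1.0)
red = np.array([1.0, 0.0, 0.0])
green = np.array([0.0, 1.0, 0.0])
blue = np.array([0.0, 0.0, 1.0])

# At a vertex, we get the value attached to that vertex
print(barycentric_interpolate(V1, V1, V2, V3, red, green, blue))
# On the edge (V1,V2), we get the linear interpolation of red and green
print(barycentric_interpolate((0.5, 0.0), V1, V2, V3, red, green, blue))
# At the centroid, the three colors are mixed in equal proportions
print(barycentric_interpolate((1/3, 1/3), V1, V2, V3, red, green, blue))
```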

The hard way

Having reviewed some important OpenGL concepts, it's time to code our quad example. But, before even using OpenGL, we need to open a window with a valid GL context. This can be done using a toolkit such as Gtk, Qt or Wx or any native toolkit (Windows, Linux, OSX). Unfortunately, the Tk Python interface does not allow creating a GL context and we cannot use it. Note that there also exist dedicated toolkits such as GLFW or GLUT, and the advantage of GLUT is that it's already installed alongside OpenGL. Even if it is now deprecated, we'll use GLUT since it's a very lightweight toolkit and does not require any extra package. Here is a minimal setup that should open a window with a black background.

    import sys
    import OpenGL.GL as gl
    import OpenGL.GLUT as glut

    def display():
        # Clear the color buffer, then show it
        gl.glClear(gl.GL_COLOR_BUFFER_BIT)
        glut.glutSwapBuffers()

    def reshape(width,height):
        gl.glViewport(0, 0, width, height)

    def keyboard( key, x, y ):
        # Exit when the Escape key is pressed
        if key == b'\x1b':
            sys.exit( )

    glut.glutInit()
    glut.glutInitDisplayMode(glut.GLUT_DOUBLE | glut.GLUT_RGBA)
    glut.glutCreateWindow('Hello world!')
    glut.glutReshapeWindow(512,512)
    glut.glutReshapeFunc(reshape)
    glut.glutDisplayFunc(display)
    glut.glutKeyboardFunc(keyboard)
    glut.glutMainLoop()

Note

You won't have access to any GL command before glutInit() and glutCreateWindow() have been executed because no OpenGL context is available until then.

The glutInitDisplayMode call specifies the properties of the GL context. At this stage, we only need double buffering (we draw on one buffer while the other is displayed) and a full RGBA 32-bit color buffer (8 bits per channel). The reshape callback informs OpenGL of the new window size while the display callback tells OpenGL what to do when a redraw is needed. In this simple case, we just clear the color buffer and swap buffers (double buffering avoids flickering). Finally, the keyboard callback allows us to exit by pressing the Escape key.

Writing shaders

Now that the window has been created, we can start writing our program, that is, we need to write a vertex and a fragment shader. For the vertex shader, the code is very simple because we took care to use normalized device coordinates to describe our quad in the previous section. This means the vertices do not need to be transformed. Nonetheless, gl_Position expects 4D coordinates, so even though we transmit only 2D coordinates (x,y), we have to fill in the two remaining ones or the final result will be undefined. For coordinate z, we'll just set it to 0.0 (but any value within the NDC range would do) and for coordinate w, we set it to 1.0 (see section Basic Mathematics for the explanation). Note also the (commented) alternative ways of writing the shader.

    attribute vec2 position;
    void main()
    {
        gl_Position = vec4(position, 0.0, 1.0);

        // or gl_Position.xyzw = vec4(position, 0.0, 1.0);

        // or gl_Position.xy = position;
        //    gl_Position.zw = vec2(0.0, 1.0);

        // or gl_Position.x = position.x;
        //    gl_Position.y = position.y;
        //    gl_Position.z = 0.0;
        //    gl_Position.w = 1.0;
    }

For the fragment shader, it is even simpler. We set the color to red which is

described by the tuple (1.0, 0.0, 0.0, 1.0) in normalized RGBA

notation. 1.0 for alpha channel means fully opaque.

    void main()
    {
        gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0);

        // or gl_FragColor.rgba = vec4(1.0, 0.0, 0.0, 1.0);

        // or gl_FragColor.rgb = vec3(1.0, 0.0, 0.0);
        //    gl_FragColor.a = 1.0;
    }

Compiling the program

We wrote our shaders and we now need to build a program that will link the vertex and the fragment shader together. Building such a program is relatively straightforward (provided we do not check for errors). First we need to request program and shader slots from the GPU:

    program = gl.glCreateProgram()
    vertex = gl.glCreateShader(gl.GL_VERTEX_SHADER)
    fragment = gl.glCreateShader(gl.GL_FRAGMENT_SHADER)

We can now ask for the compilation of our shaders into GPU objects, and we log any error reported by the compiler (e.g. syntax error, undefined variables, etc.):

    vertex_code = """
      attribute vec2 position;
      void main() { gl_Position = vec4(position, 0.0, 1.0); } """

    fragment_code = """
      void main() { gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0); } """

    # Set shaders source
    gl.glShaderSource(vertex, vertex_code)
    gl.glShaderSource(fragment, fragment_code)

    # Compile shaders
    gl.glCompileShader(vertex)
    if not gl.glGetShaderiv(vertex, gl.GL_COMPILE_STATUS):
        error = gl.glGetShaderInfoLog(vertex).decode()
        print(error)
        raise RuntimeError("Vertex shader compilation error")

    gl.glCompileShader(fragment)
    if not gl.glGetShaderiv(fragment, gl.GL_COMPILE_STATUS):
        error = gl.glGetShaderInfoLog(fragment).decode()
        print(error)
        raise RuntimeError("Fragment shader compilation error")

Then we link our two objects into a program and, again, we check for errors during the process.

    gl.glAttachShader(program, vertex)
    gl.glAttachShader(program, fragment)
    gl.glLinkProgram(program)

    if not gl.glGetProgramiv(program, gl.GL_LINK_STATUS):
        print(gl.glGetProgramInfoLog(program))
        raise RuntimeError('Linking error')

and we can get rid of the shaders, they won't be used again (you can think of

them as .o files in C).

    gl.glDetachShader(program, vertex)
    gl.glDetachShader(program, fragment)

Finally, we make program the default program to be run. We can do it now because we'll use a single program in this example:

gl.glUseProgram(program)

Uploading data to the GPU

Next, we need to build CPU data and the corresponding GPU buffer that will hold a copy of the CPU data (the GPU cannot access CPU memory). In Python, things are greatly facilitated by NumPy, which gives precise control over number representations. This is important because GLES 2.0 floats have to be exactly 32 bits long and a regular Python float would not work (it is actually equivalent to a C double). So let us specify a NumPy array holding 4×2 32-bit floats that will correspond to our 4×(x,y) vertices:

    import numpy as np

    # Build data
    data = np.zeros((4,2), dtype=np.float32)
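The size argument above can be checked without any GPU. The following is only a small sketch (the variable names are ours, chosen for illustration) that compares the size of a NumPy float32 value with the size of a C double, which is what a regular Python float stores:

```python
import struct
import numpy as np

# Same declaration as in the text: 4 vertices, 2 coordinates each.
data = np.zeros((4, 2), dtype=np.float32)

bytes_per_value = data.itemsize             # size of one float32 value, in bytes
total_bytes = data.nbytes                   # 4 vertices x 2 values x 4 bytes
python_float_bytes = struct.calcsize("d")   # size of a C double, in bytes

print(bytes_per_value, total_bytes, python_float_bytes)
```

The total size in bytes is the quantity that is later handed to glBufferData as data.nbytes.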

We then create a placeholder on the GPU without yet specifying the size:

    # Request a buffer slot from GPU
    buffer = gl.glGenBuffers(1)

    # Make this buffer the default one
    gl.glBindBuffer(gl.GL_ARRAY_BUFFER, buffer)

We now need to bind the buffer to the program, that is, for each attribute present in the vertex shader program, we need to tell OpenGL where to find the corresponding data (i.e. GPU buffer) and this requires some computations. More precisely, we need to tell the GPU how to read the buffer in order to bind each value to the relevant attribute. To do this, the GPU needs to know the stride between two consecutive elements and the offset at which each attribute can be read:

                 1ˢᵗ vertex  2ⁿᵈ vertex  3ʳᵈ vertex    …
                ┌───────────┬───────────┬───────────┬┄┄
                ┌─────┬─────┬─────┬─────┬─────┬─────┬─┄
                │  X  │  Y  │  X  │  Y  │  X  │  Y  │ …
                └─────┴─────┴─────┴─────┴─────┴─────┴─┄
     offset 0 → │ (x,y)     └───────────┘
                               stride

In our simple quad scenario, this is relatively easy to write because we have a single attribute ("position"). We first request the attribute location inside the program and then we bind the buffer with the relevant offset.

    import ctypes

    stride = data.strides[0]
    offset = ctypes.c_void_p(0)
    loc = gl.glGetAttribLocation(program, "position")
    gl.glEnableVertexAttribArray(loc)
    gl.glBindBuffer(gl.GL_ARRAY_BUFFER, buffer)
    gl.glVertexAttribPointer(loc, 2, gl.GL_FLOAT, False, stride, offset)

We're basically telling the program how to bind data to the relevant attribute. This is done by providing the stride of the array (how many bytes between each record) and the offset of a given attribute.
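To make the stride and offset concrete, here is a small sketch that only inspects the NumPy array and needs no GL context. The names component and offset_bytes are introduced here for illustration:

```python
import ctypes
import numpy as np

data = np.zeros((4, 2), dtype=np.float32)

stride = data.strides[0]       # bytes from one vertex (row) to the next
component = data.strides[1]    # bytes from x to y inside one vertex

# "position" starts at the very beginning of each record.
offset_bytes = 0
offset = ctypes.c_void_p(offset_bytes)   # pointer-like form, as passed in the text

print(stride, component, offset_bytes)
```

Each vertex is made of two 4-byte floats, hence a stride of 8 bytes between consecutive vertices.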

Let's now fill our CPU data and upload it to the newly created GPU buffer:

    # Assign CPU data
    data[...] = (-1,+1), (+1,+1), (-1,-1), (+1,-1)

    # Upload CPU data to GPU buffer
    gl.glBufferData(gl.GL_ARRAY_BUFFER, data.nbytes, data, gl.GL_DYNAMIC_DRAW)

Rendering

We're done, we can now rewrite the display function:

    def display():
        gl.glClear(gl.GL_COLOR_BUFFER_BIT)
        gl.glDrawArrays(gl.GL_TRIANGLE_STRIP, 0, 4)
        glut.glutSwapBuffers()

Figure

The 0,4 arguments in the glDrawArrays call tell OpenGL that we want to display 4 vertices from our current active buffer, starting at vertex 0. You should obtain the figure on the right with the same red (boring) color. The whole source is available from code/chapter-03/glut-quad-solid.py.

All these operations are necessary for displaying a single colored quad on screen and complexity can escalate pretty badly if you add more objects, projections, lighting, texture, etc. This is the reason why we'll stop using the raw OpenGL interface in favor of a library. We'll use the glumpy library, mostly because I wrote it, but also because it offers a tight integration with numpy. Of course, you can design your own library to ease the writing of GL Python applications.

Uniform color

Figure: uniform variable specifying the color of the quad.

In the previous example, we hard-coded the red color inside the fragment shader source code. But what if we want to change the color from within the Python program? We could rebuild the program with the new color but that would not be very efficient. Fortunately there is a simple solution provided by OpenGL: uniform. Uniforms, unlike attributes, do not change from one vertex to the other and this is precisely what we need in our case. We thus need to slightly modify our fragment shader to use this uniform color:

    uniform vec4 color;
    void main()
    {
        gl_FragColor = color;
    }

Of course, we also need to upload a color to this new uniform location, and this is easier than for attributes because the memory has already been allocated on the GPU (since the size is known and does not depend on the number of vertices).

    loc = gl.glGetUniformLocation(program, "color")
    gl.glUniform4f(loc, 0.0, 0.0, 1.0, 1.0)

If you run the new code/glut-quad-uniform-color.py example, you should obtain the blue quad as shown on the right.

Varying color

Figure

Until now, we have been using a constant color for the four vertices of our quad and the result is (unsurprisingly) a boring uniform red or blue quad. We can make it a bit more interesting though by assigning different colors to each vertex and see how OpenGL will interpolate colors. Our new vertex shader would need to be rewritten as:

    attribute vec2 position;
    attribute vec4 color;
    varying vec4 v_color;
    void main()
    {
        gl_Position = vec4(position, 0.0, 1.0);
        v_color = color;
    }

We just added our new attribute color but we also added a new variable type:

varying. This type is actually used to transmit a value from the vertex

shader to the fragment shader. As you might have guessed, the varying type

means this value won't be constant over the different fragments but will be

interpolated depending on the relative position of the fragment in the

triangle, as I explained in the Interpolation section. Note that we also

have to rewrite our fragment shader accordingly, but now the v_color will be

an input:

    varying vec4 v_color;
    void main()
    {
        gl_FragColor = v_color;
    }
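To get a feeling for what the interpolation of a varying does, here is a CPU-side sketch in NumPy. It assumes plain barycentric weighting inside one triangle and ignores perspective correction, which does not matter here since w = 1 for every vertex. The interpolate helper and the three colors are hypothetical, chosen only for illustration:

```python
import numpy as np

# Per-vertex RGBA colors of one triangle (illustrative values).
colors = np.array([[1, 0, 0, 1],    # red
                   [0, 1, 0, 1],    # green
                   [0, 0, 1, 1]], dtype=np.float32)

def interpolate(weights, values):
    """Blend per-vertex values with barycentric weights (which sum to 1)."""
    weights = np.asarray(weights, dtype=np.float32)
    return weights @ values

at_vertex = interpolate([1, 0, 0], colors)            # exactly on the first vertex
at_center = interpolate([1/3, 1/3, 1/3], colors)      # centroid of the triangle
```

A fragment located on a vertex receives exactly that vertex's color, while a fragment at the center receives an equal mix of the three.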

We now need to upload the vertex colors to the GPU. We could create a new dedicated vertex buffer and bind it to the new color attribute, but there is a more interesting solution. We'll instead use a single NumPy array and a single buffer, taking advantage of the NumPy structured array:

    data = np.zeros(4, [("position", np.float32, 2),
                        ("color",    np.float32, 4)])
    data['position'] = (-1,+1), (+1,+1), (-1,-1), (+1,-1)
    data['color']    = (0,1,0,1), (1,1,0,1), (1,0,0,1), (0,0,1,1)

Our CPU data structure is thus:

           1ˢᵗ vertex              2ⁿᵈ vertex
    ┌───────────────────────┬───────────────────────┬┄
    ┌───┬───┬───┬───┬───┬───┬───┬───┬───┬───┬───┬───┬─┄
    │ X │ Y │ R │ G │ B │ A │ X │ Y │ R │ G │ B │ A │ …
    └───┴───┴───┴───┴───┴───┴───┴───┴───┴───┴───┴───┴─┄
    ↑       ↑               └───────────────────────┘
    position color                   stride
    offset   offset

Binding the buffer is now a bit more complicated but it is made relatively easy thanks to NumPy:

    stride = data.strides[0]

    offset = ctypes.c_void_p(0)
    loc = gl.glGetAttribLocation(program, "position")
    gl.glEnableVertexAttribArray(loc)
    gl.glBindBuffer(gl.GL_ARRAY_BUFFER, buffer)
    gl.glVertexAttribPointer(loc, 2, gl.GL_FLOAT, False, stride, offset)

    offset = ctypes.c_void_p(data.dtype["position"].itemsize)
    loc = gl.glGetAttribLocation(program, "color")
    gl.glEnableVertexAttribArray(loc)
    gl.glBindBuffer(gl.GL_ARRAY_BUFFER, buffer)
    gl.glVertexAttribPointer(loc, 4, gl.GL_FLOAT, False, stride, offset)
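The numbers behind this binding can be read directly from the structured dtype. The sketch below needs no GL context; it uses the standard dtype.fields mapping, and the variable names are ours:

```python
import numpy as np

data = np.zeros(4, [("position", np.float32, 2),
                    ("color",    np.float32, 4)])

stride = data.strides[0]                               # one full record: 2 + 4 floats
position_offset = data.dtype.fields["position"][1]     # byte offset of "position"
color_offset = data.dtype.fields["color"][1]           # byte offset of "color"

print(stride, position_offset, color_offset)
```

The color offset is equal to the size of the position field, which is why data.dtype["position"].itemsize can be used in the binding code.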

The easy way

As we've seen in the previous section, displaying a simple quad using modern GL is quite tedious and requires a fair number of operations. This is why, from now on, we'll use glumpy, whose goal is to make this process both easy and intuitive.

Glumpy is organized around three main modules:

- The Application layer (app) package is responsible for opening a window and handling user events such as mouse and keyboard interactions.
- The OpenGL object oriented layer (gloo) package is responsible for handling shader programs and syncing CPU/GPU data through the numpy interface.
- The Graphic layer (graphics) package provides higher-level common objects such as text, collections and widgets.

Those modules will help us write any OpenGL program quite easily. Let's consider again our quad example:

Note

Glumpy will look for any available backend in a given order, starting with GLFW. I strongly advise installing the GLFW package on your system since this backend is actively maintained and "just works".

We still need to open a window, but now this is straightforward:

    from glumpy import app, gloo, gl

    # Create a window with a valid GL context
    window = app.Window()

If necessary, you can also indicate which backend to use by writing

app.use("glfw") before creating the window. The creation of the program is

also straightforward:

    # Build the program and corresponding buffers (with 4 vertices)
    quad = gloo.Program(vertex, fragment, count=4)

With the above line, both the CPU data and GPU data (buffer) have been created

and no extra command is necessary at this stage and uploading the data is only

a matter of setting the different fields of the quad program:

    # Upload data into GPU
    quad['position'] = (-1,+1), (+1,+1), (-1,-1), (+1,-1)

Under the hood, glumpy has parsed your shader programs and has identified

attributes. Rendering is just a matter of calling the draw method from our

shader program, using the proper mode.

    # Tell glumpy what needs to be done at each redraw
    @window.event
    def on_draw(dt):
        window.clear()
        quad.draw(gl.GL_TRIANGLE_STRIP)

    # Run the app
    app.run()

The whole source is available in code/chapter-03/glumpy-quad-solid.py.

If you run this program using the --debug switch, you should obtain the

following output that shows what is being done in the background. More

specifically, you can check that the program is actually compiled and linked

using the specified shaders and that the buffer is created and bound to the

program.

    [i] HiDPI detected, fixing window size
    [i] Using GLFW (GL 2.1)
    [i] Running at 60 frames/second
    GPU: Creating program
    GPU: Attaching shaders to program
    GPU: Creating shader
    GPU: Compiling shader
    GPU: Creating shader
    GPU: Compiling shader
    GPU: Linking program
    GPU: Activating program (id=1)
    GPU: Activating buffer (id=7)
    GPU: Creating buffer (id=7)
    GPU: Updating position
    GPU: Deactivating buffer (id=7)
    GPU: Deactivating program (id=1)

Uniform color

Adding a uniform specified color is only a matter of modifying the

fragment shader as in the previous section and directly assigning the color to

the quad program (see code/chapter-03/glumpy-quad-uniform-color.py):

quad["color"] = 0,0,1,1

Varying color

Adding a per-vertex color is also only a matter of modifying the vertex and fragment shaders as in the previous section and directly assigning the colors to the quad program (see code/chapter-03/glumpy-quad-varying-color.py):

quad["color"] = (1,1,0,1), (1,0,0,1), (0,0,1,1), (0,1,0,1)

Exercises

Now we can play a bit with the shader and hopefully you'll understand why learning to program the dynamic graphic pipeline is worth the effort. Modifying the rendering is now a matter of writing the proper shader. We'll get a first taste in the exercises below but we'll see much more powerful shader tricks in the next chapters.

Figure

Scaling the quad. We previously used a uniform to pass a color to the fragment shader, but we could also have used one in the vertex shader to pass any kind of information. In this exercise, try to modify the vertex shader in the varying color example in order for the quad to be animated and to scale with time, as shown in the figure on the right. You will need to update the scale factor within the Python program, for example in the draw function.

Solution: code/chapter-03/quad-scale.py

Figure

Rotating the quad Let's now rotate the quad as in the figure on the right.

Note that you have access to the cos and sin functions from within the

shader. If you forgot your geometry, here is a quick reminder for a rotation of

angle theta around the origin (0,0) for a point (x,y):

    float x2 = cos(theta)*x - sin(theta)*y;
    float y2 = sin(theta)*x + cos(theta)*y;

Solution: code/chapter-03/quad-rotation.py
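Before moving the formula into a shader, it may help to check it on the CPU. This is only a sketch of the rotation reminder above, written in plain Python; the rotate helper is a name introduced here for illustration, and theta is expressed in radians, as for the GLSL cos and sin functions:

```python
import math

def rotate(x, y, theta):
    """Rotate the point (x, y) by theta radians around the origin (0, 0)."""
    x2 = math.cos(theta) * x - math.sin(theta) * y
    y2 = math.sin(theta) * x + math.cos(theta) * y
    return x2, y2

# A quarter turn sends the point (1, 0) onto the Y-axis.
x2, y2 = rotate(1.0, 0.0, math.pi / 2)
```

A positive angle rotates counter-clockwise, so the point (1, 0) ends up at (0, 1) up to rounding errors.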

Basic Mathematics

.

There is no way around mathematics. If you want to understand computer geometry, you need to master a few mathematical concepts. But not that many actually. I won't introduce everything since there are already a lot of tutorials online explaining the core concepts of linear algebra, Euclidean geometry, homogeneous coordinates, projective geometry and quaternions (yes, those are the keywords to enter in your preferred search engine). The teaser image above comes from the Cyclopaedia, an Universal Dictionary of Arts and Sciences published by Ephraim Chambers in London in 1728 (source: History of Geometry).

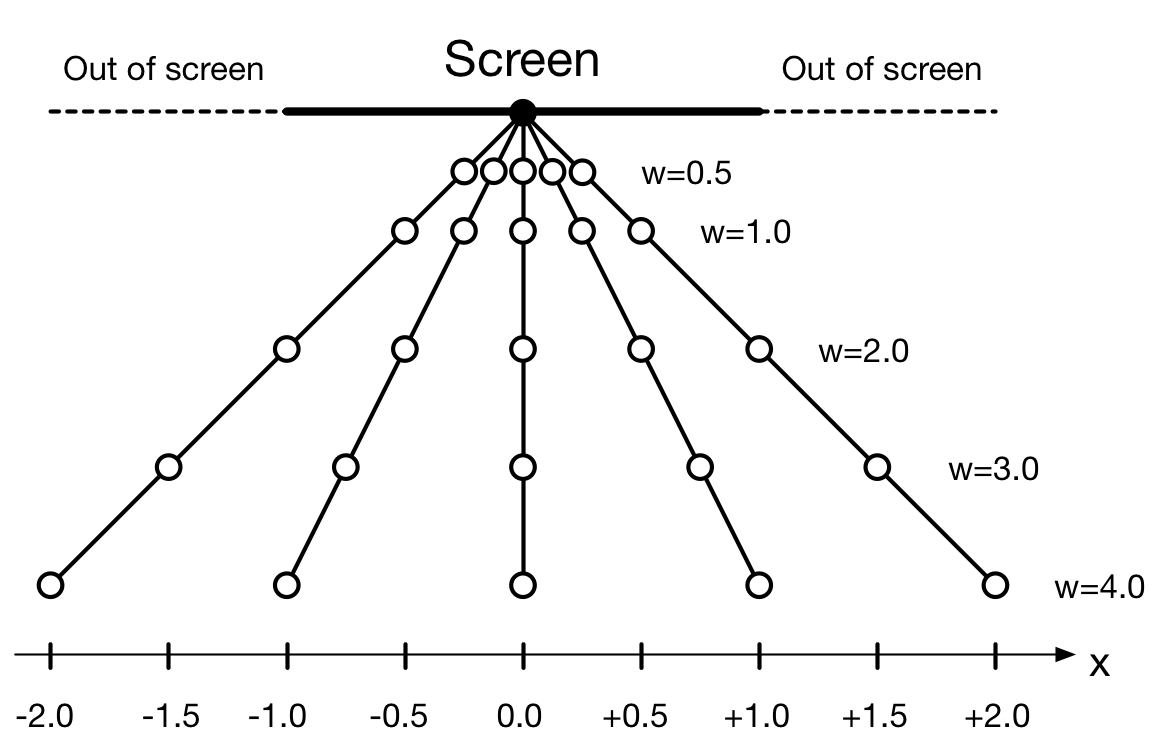

Projective Geometry

Homogeneous coordinates

Even though we're dealing with the three-dimensional Euclidean space, three-dimensional coordinates are actually not the best representation we can use, and this is the reason why we will use homogeneous coordinates that describe coordinates in a four-dimensional projective space (that includes the Euclidean space). We'll see in the next section that this allows us to express linear transformations (rotation, scaling), affine transformations (translations) and projection using 4×4 matrices. Homogeneous coordinates are tightly linked with regular 3D coordinates, with the notable difference that they require a fourth w coordinate that corresponds to the fourth dimension; let's call it the projective dimension. In order to explain it, we'll use a 1-dimensional space where point coordinates are single scalars indicating the position of the points on the X-axis. This will hopefully make everything clearer.

Let us consider for example a simple set of points [-1.0, -0.5, 0.0, +0.5, +1.0] in this unidimensional space. We want to project it onto another segment [-2,+2] that represents the screen (any point projected outside this segment is discarded and won't be visible in the final projection). The question now is: how do we project the points onto the screen?

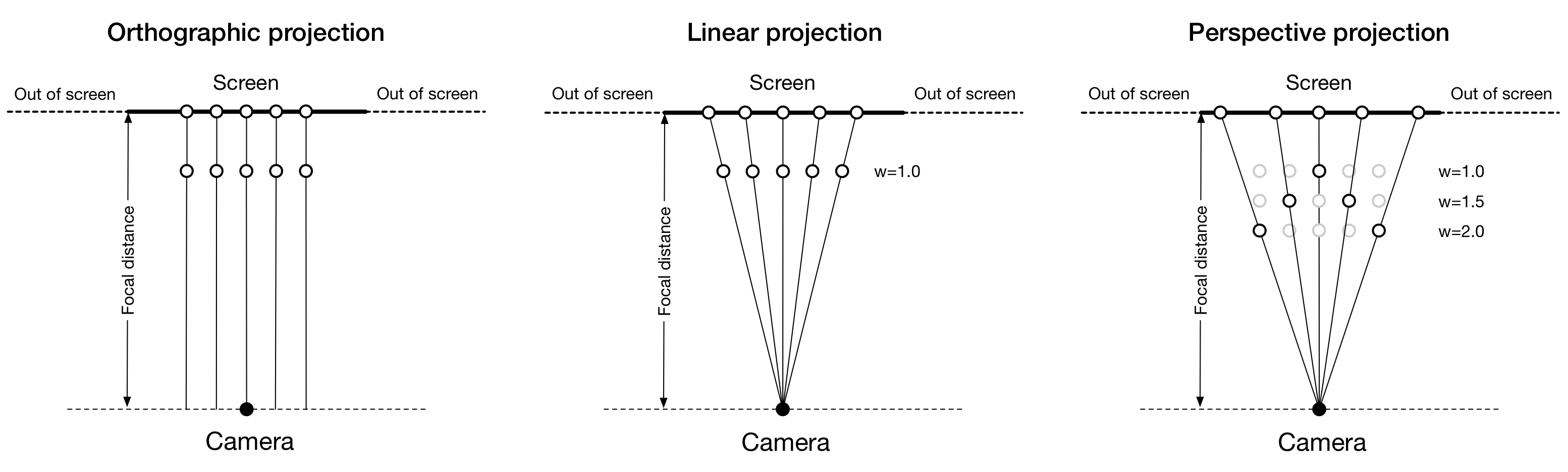

Figure: [-1.0, -0.5, 0.0, +0.5, +1.0].

To answer this question, we need to know where the camera is (from where we look at the scene) and where the points are positioned relative to the screen. This is the reason why we introduce a supplementary w coordinate in order to indicate the distance to the screen. To go from our Euclidean representation to our new homogeneous representation, we'll use a conventional and default value of 1 for all the w such that our new point set is now [(-1.0,1.0), (-0.5,1.0), (0.0,1.0), (+0.5,1.0), (+1.0,1.0)]. Reciprocally, a point (x,w) in projective space corresponds to the point x/w (if w ≠ 0) in our unidimensional Euclidean space. From this conversion, we can see immediately that there actually exists an infinite set of homogeneous coordinates that correspond to a single Cartesian coordinate, as illustrated on the figure.
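The conversion in both directions can be sketched in a few lines of plain Python. The helper names to_homogeneous and to_cartesian are hypothetical and only serve this illustration of the 1-dimensional case:

```python
def to_homogeneous(x, w=1.0):
    """Return one homogeneous representation (x*w, w) of the 1D point x."""
    return (x * w, w)

def to_cartesian(point):
    """Convert a homogeneous point (x, w) back to the scalar x/w."""
    xh, w = point
    if w == 0:
        raise ValueError("w = 0 has no Cartesian equivalent")
    return xh / w

points = [-1.0, -0.5, 0.0, 0.5, 1.0]

# Default conversion with w = 1, as in the text.
homogeneous = [to_homogeneous(x) for x in points]

# Different w values, yet always the same Cartesian point 0.5.
same_point = [to_cartesian(to_homogeneous(0.5, w)) for w in (1.0, 2.0, 4.0)]
```

The three homogeneous points (0.5, 1), (1, 2) and (2, 4) all map back to 0.5, which is the infinite set of representations mentioned above.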

Figure

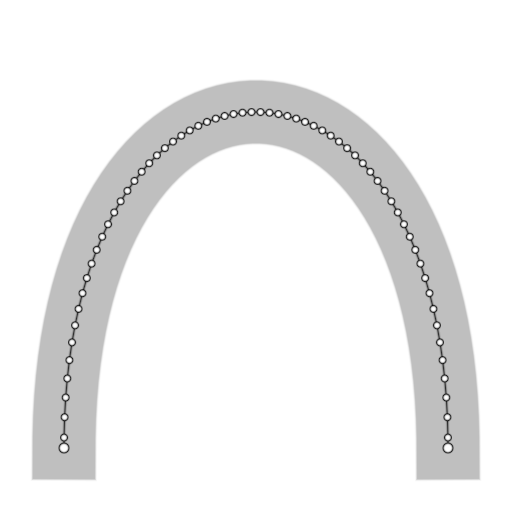

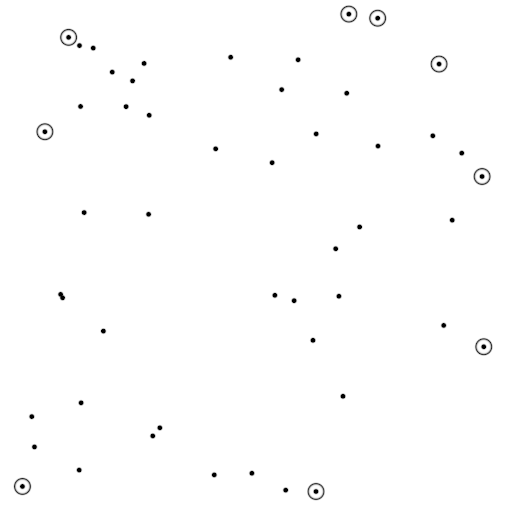

Projections

We are now ready to project our point set onto the screen. As shown on the figure above, we can use an orthographic projection (all rays are parallel) or a linear projection (rays originate from the camera point and hit the screen, passing through the points to be projected). For these two projections, results are similar but not identical. In the first case, distances have been exactly conserved while in the second case, the distance between projected points has increased, but projected points are still equidistant. The third projection is where homogeneous coordinates make sense. For this (arbitrary) projection, we decided that the further the point is from the origin, the further away from the origin its projection will be. To do that, we measure the distance of the point to the origin and we add this distance to its w value before projecting it (this corresponds to the black circles on the figure) using the linear projection. It is to be noted that this new projection does not conserve the distance relationship: if we consider the set of projected points [P(-1.0), P(-0.5), P(0.0), P(+0.5), P(+1.0)], we have ║P(-1.0)-P(-0.5)║ > ║P(-0.5)-P(0.0)║.

Note

Quaternions are not homogeneous coordinates even though they are usually represented in the form of a 4-tuple (a,b,c,d) that is a shortcut for the actual representation: a + bi⃗ + cj⃗ + dk⃗, where a, b, c, and d are real numbers, and i⃗, j⃗, k⃗ are the fundamental quaternion units.

Back to our regular 3D-Euclidean space, the principle remains the same and we have the following relationship between Cartesian and homogeneous coordinates:

    (x,y,z,w)   → (x/w, y/w, z/w)   (for w ≠ 0)
    Homogeneous   Cartesian

    (x,y,z)     → (x, y, z, 1)
    Cartesian     Homogeneous

If you didn't understand everything, you can stick to the description provided by Sam Hocevar:

- If w = 1, then the vector (x,y,z,1) is a position in space

- If w = 0, then the vector (x,y,z,0) is a direction

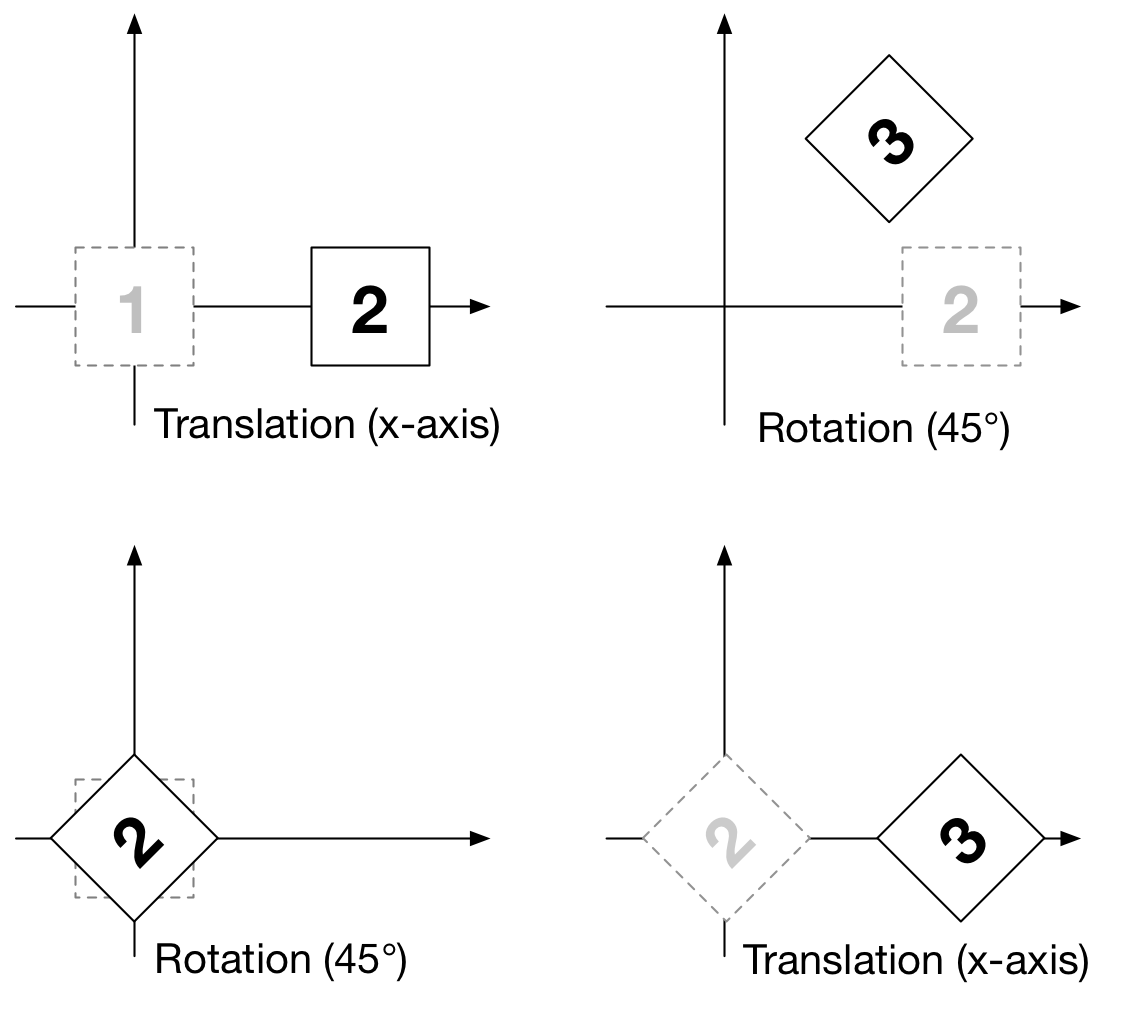

Transformations

Figure

We'll now use homogeneous coordinates and express all our transformations using only 4×4 matrices. This will allow us to chain several transformations by multiplying transformation matrices. However, before diving into the actual definition of these matrices, we need to decide if we consider a four-coordinate vector to be 4 rows and 1 column or 1 row and 4 columns. Depending on the answer, the multiplication with a matrix will happen on the left or on the right side of the vector. To be consistent with the OpenGL convention, we'll consider a vector to be 4 rows and 1 column, meaning transformations happen on the left side of vectors. To transform a vertex V by a transformation matrix M, we write: V' = M*V. To chain two transformations M1 and M2 (first M1, then M2), we write: V' = M2*M1*V, which is different from V' = M1*M2*V because matrix multiplication is not commutative. As clearly illustrated by the figure on the right, this means for example that a rotation followed by a translation is not the same as a translation followed by a rotation.
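The effect of the order can be checked numerically. The sketch below uses two small helpers, translation and rotation_z, that are written here for illustration only (they are not glumpy functions) and that follow the column-vector convention just described, with the translation and Z-axis rotation matrices given later in this section:

```python
import numpy as np

def translation(tx, ty, tz):
    """4x4 translation matrix acting on column vectors."""
    M = np.eye(4)
    M[:3, 3] = tx, ty, tz
    return M

def rotation_z(degrees):
    """4x4 rotation matrix around the Z-axis acting on column vectors."""
    d = np.radians(degrees)
    c, s = np.cos(d), np.sin(d)
    M = np.eye(4)
    M[:2, :2] = [[c, -s], [s, c]]
    return M

V = np.array([1.0, 0.0, 0.0, 1.0])
R = rotation_z(90)
T = translation(1, 0, 0)

rotate_then_translate = T @ R @ V    # R is applied first, then T
translate_then_rotate = R @ T @ V    # T is applied first, then R
```

The same vertex ends up at (1, 1, 0) in the first case and at (0, 2, 0) in the second one.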

Translation

Considering a vertex V = (x, y, z, 1) and a translation vector T = (tx, ty,

tz, 0), the translation of V by T is (x+tx, y+ty, z+tz, 1). The

corresponding matrix is given below:

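$$
\begin{bmatrix}
1 & 0 & 0 & t_x \\
0 & 1 & 0 & t_y \\
0 & 0 & 1 & t_z \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}
=
\begin{bmatrix}
1 \cdot x + 0 \cdot y + 0 \cdot z + t_x \cdot 1 \\
0 \cdot x + 1 \cdot y + 0 \cdot z + t_y \cdot 1 \\
0 \cdot x + 0 \cdot y + 1 \cdot z + t_z \cdot 1 \\
0 \cdot x + 0 \cdot y + 0 \cdot z + 1 \cdot 1
\end{bmatrix}
=
\begin{bmatrix} x + t_x \\ y + t_y \\ z + t_z \\ 1 \end{bmatrix}
$$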
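This matrix also illustrates the difference between positions (w = 1) and directions (w = 0) mentioned earlier. The following NumPy sketch, with values chosen for illustration, applies a translation by (1, 2, 3) to both kinds of vectors:

```python
import numpy as np

# Translation by (1, 2, 3), column-vector convention.
T = np.array([[1, 0, 0, 1],
              [0, 1, 0, 2],
              [0, 0, 1, 3],
              [0, 0, 0, 1]], dtype=np.float32)

position = np.array([3, 2, 1, 1], dtype=np.float32)    # w = 1: a point in space
direction = np.array([3, 2, 1, 0], dtype=np.float32)   # w = 0: a direction

moved_position = T @ position
moved_direction = T @ direction
```

The position is shifted to (4, 4, 4) while the direction is left untouched, because the last column of the matrix is multiplied by w.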

Scaling

Considering a vertex V = (x, y, z, 1) and a scaling vector S = (sx, sy, sz,

0), the scaling of V by S is (sx*x, sy*y, sz*z, 1). The corresponding

matrix is given below:

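$$
\begin{bmatrix}
s_x & 0 & 0 & 0 \\
0 & s_y & 0 & 0 \\
0 & 0 & s_z & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}
=
\begin{bmatrix}
s_x \cdot x + 0 \cdot y + 0 \cdot z + 0 \cdot 1 \\
0 \cdot x + s_y \cdot y + 0 \cdot z + 0 \cdot 1 \\
0 \cdot x + 0 \cdot y + s_z \cdot z + 0 \cdot 1 \\
0 \cdot x + 0 \cdot y + 0 \cdot z + 1 \cdot 1
\end{bmatrix}
=
\begin{bmatrix} s_x \cdot x \\ s_y \cdot y \\ s_z \cdot z \\ 1 \end{bmatrix}
$$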

Rotation

A rotation is defined by an axis of rotation A and an angle of rotation d. We define below only the most common rotations, that is, around the X-axis, Y-axis and Z-axis.

X-axis rotation

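$$
\begin{bmatrix}
1 & 0 & 0 & 0 \\
0 & \cos(d) & -\sin(d) & 0 \\
0 & \sin(d) & \cos(d) & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}
=
\begin{bmatrix}
1 \cdot x + 0 \cdot y + 0 \cdot z + 0 \cdot 1 \\
0 \cdot x + \cos(d) \cdot y - \sin(d) \cdot z + 0 \cdot 1 \\
0 \cdot x + \sin(d) \cdot y + \cos(d) \cdot z + 0 \cdot 1 \\
0 \cdot x + 0 \cdot y + 0 \cdot z + 1 \cdot 1
\end{bmatrix}
=
\begin{bmatrix}
x \\
\cos(d) \cdot y - \sin(d) \cdot z \\
\sin(d) \cdot y + \cos(d) \cdot z \\
1
\end{bmatrix}
$$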

Y-axis rotation

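$$
\begin{bmatrix}
\cos(d) & 0 & \sin(d) & 0 \\
0 & 1 & 0 & 0 \\
-\sin(d) & 0 & \cos(d) & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}
=
\begin{bmatrix}
\cos(d) \cdot x + 0 \cdot y + \sin(d) \cdot z + 0 \cdot 1 \\
0 \cdot x + 1 \cdot y + 0 \cdot z + 0 \cdot 1 \\
-\sin(d) \cdot x + 0 \cdot y + \cos(d) \cdot z + 0 \cdot 1 \\
0 \cdot x + 0 \cdot y + 0 \cdot z + 1 \cdot 1
\end{bmatrix}
=
\begin{bmatrix}
\cos(d) \cdot x + \sin(d) \cdot z \\
y \\
-\sin(d) \cdot x + \cos(d) \cdot z \\
1
\end{bmatrix}
$$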

Z-axis rotation

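$$
\begin{bmatrix}
\cos(d) & -\sin(d) & 0 & 0 \\
\sin(d) & \cos(d) & 0 & 0 \\
0 & 0 & 1 & 0 \\
0 & 0 & 0 & 1
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix}
=
\begin{bmatrix}
\cos(d) \cdot x - \sin(d) \cdot y + 0 \cdot z + 0 \cdot 1 \\
\sin(d) \cdot x + \cos(d) \cdot y + 0 \cdot z + 0 \cdot 1 \\
0 \cdot x + 0 \cdot y + 1 \cdot z + 0 \cdot 1 \\
0 \cdot x + 0 \cdot y + 0 \cdot z + 1 \cdot 1
\end{bmatrix}
=
\begin{bmatrix}
\cos(d) \cdot x - \sin(d) \cdot y \\
\sin(d) \cdot x + \cos(d) \cdot y \\
z \\
1
\end{bmatrix}
$$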

A word of caution

OpenGL uses a column-major representation of matrices. This means that when reading a set of 16 contiguous values in memory representing a 4×4 matrix, the first 4 values correspond to the first column, while in NumPy (using the default C layout), they would correspond to the first row. In order to stay consistent with most OpenGL tutorials, we'll use a column-major order in the rest of this book. This means that any glumpy transformation will appear to be transposed when displayed, but the underlying memory representation will still be consistent with OpenGL and GLSL. This is all you need to know at this stage.

Considering a set of 16 contiguous values in memory:

$$
\begin{bmatrix} a & b & c & d & e & f & g & h & i & j & k & l & m & n & o & p \end{bmatrix}
$$

We get different representations depending on the order convention (column major or row major):

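$$
\underbrace{
\begin{bmatrix}
a & e & i & m \\
b & f & j & n \\
c & g & k & o \\
d & h & l & p
\end{bmatrix}
\begin{bmatrix} x \\ y \\ z \\ w \end{bmatrix}
}_{\text{column-major (OpenGL)}}
=
\underbrace{
\begin{bmatrix} x & y & z & w \end{bmatrix}
\begin{bmatrix}
a & b & c & d \\
e & f & g & h \\
i & j & k & l \\
m & n & o & p
\end{bmatrix}
}_{\text{row-major (NumPy)}}
=
\begin{bmatrix}
ax + ey + iz + mw \\
bx + fy + jz + nw \\
cx + gy + kz + ow \\
dx + hy + lz + pw
\end{bmatrix}
$$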

For example, here is a translation matrix as returned by the

glumpy.glm.translation function:

    import glumpy

    T = glumpy.glm.translation(1,2,3)
    print(T)
    [[ 1.  0.  0.  0.]
     [ 0.  1.  0.  0.]
     [ 0.  0.  1.  0.]
     [ 1.  2.  3.  1.]]
    print(T.ravel())
    [ 1.  0.  0.  0.  0.  1.  0.  0.  0.  0.  1.  0.  1.  2.  3.  1.]
                                                      ↑   ↑   ↑
                                                      13  14  15

So this means you would use this translation on the left of a vector once uploaded to the GPU, but you would use it on the right of a vector with Python/NumPy:

    import numpy as np

    T = glumpy.glm.translation(1,2,3)
    V = [3,2,1,1]
    print(np.dot(V, T))
    [ 4.  4.  4.  1.]
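The equivalence between the two conventions can be verified with NumPy alone. In this sketch, the matrix M is written by hand in the mathematical (column-vector) form and T is its transpose, which matches the printed output above; no glumpy call is involved:

```python
import numpy as np

# Mathematical (column-vector) form of a translation by (1, 2, 3).
M = np.array([[1, 0, 0, 1],
              [0, 1, 0, 2],
              [0, 0, 1, 3],
              [0, 0, 0, 1]], dtype=np.float32)

# Transposed form, as displayed in the text (C-contiguous copy).
T = M.T.copy()

V = np.array([3, 2, 1, 1], dtype=np.float32)

left = M @ V                        # matrix on the left of a column vector
right = V @ T                       # vector on the left of the transposed matrix
memory = T.ravel()                  # the 16 values of T in memory order
column_major = M.ravel(order="F")   # M read column by column
```

Both products give the same translated vertex, and reading M column by column yields exactly the memory layout of T, which is what OpenGL expects.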

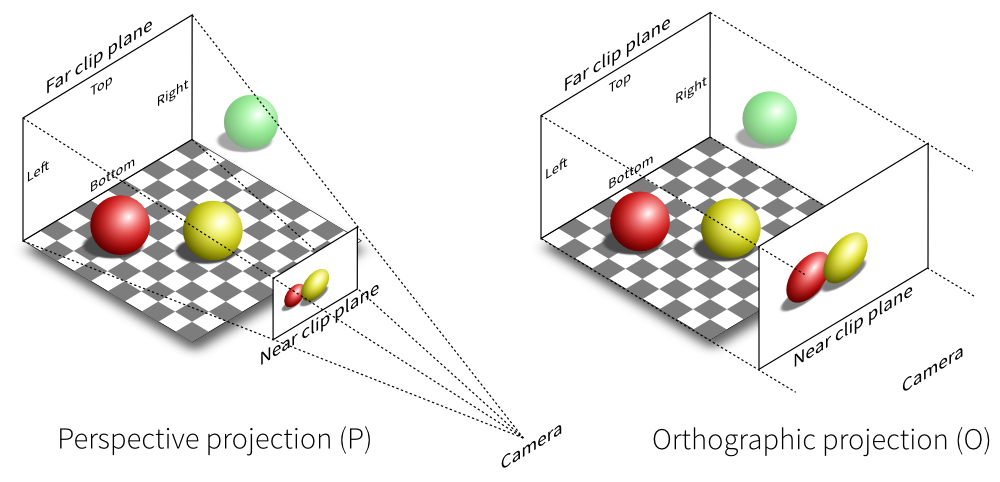

Projections

In order to define a projection, we first need to specify what we want to view, that is, we need to define a viewing volume such that any object within the volume (even partially) will be rendered while objects outside won't. On the image below, the yellow and red spheres are within the volume while the green one is not and does not appear on the projection.

There exist many different ways to project a 3D volume onto a 2D screen but we'll only use the perspective projection (distant objects appear smaller) and the orthographic projection which is a parallel projection (distant objects have the same size as closer ones) as illustrated on the image above. Until now (previous section), we have been using implicitly an orthographic projection in the z=0 plane.

Depending on the projection we want, we will use one of the two projection matrices below:

Orthographic

$$
\begin{bmatrix}
\frac{2}{r-l} & 0 & 0 & -\frac{r+l}{r-l} \\
0 & \frac{2}{t-b} & 0 & -\frac{t+b}{t-b} \\
0 & 0 & -\frac{2}{f-n} & -\frac{f+n}{f-n} \\
0 & 0 & 0 & 1
\end{bmatrix}
$$

n: near, f: far, t: top, b: bottom, l: left, r: right

Orthographic projection
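As a sanity check, the sketch below builds the standard orthographic matrix (the one used by glOrtho, whose last row is (0, 0, 0, 1) so that w stays equal to 1) and verifies that two opposite corners of the viewing volume are mapped onto the corners of the normalized cube. The ortho function is written here for illustration and is not the glumpy implementation:

```python
import numpy as np

def ortho(l, r, b, t, n, f):
    """Orthographic projection matrix (column-vector convention)."""
    return np.array([
        [2/(r-l), 0,       0,        -(r+l)/(r-l)],
        [0,       2/(t-b), 0,        -(t+b)/(t-b)],
        [0,       0,       -2/(f-n), -(f+n)/(f-n)],
        [0,       0,       0,        1]])

P = ortho(-2, 2, -1, 1, 1, 10)

# The camera looks toward negative z: the near plane is at z = -n.
near_corner = P @ np.array([-2, -1, -1, 1])    # left, bottom, near
far_corner = P @ np.array([2, 1, -10, 1])      # right, top, far
```

The near-bottom-left corner goes to (-1, -1, -1) and the far-top-right corner to (+1, +1, +1), with w unchanged.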

Perspective

$$
\begin{bmatrix}
\frac{2n}{r-l} & 0 & \frac{r+l}{r-l} & 0 \\
0 & \frac{2n}{t-b} & \frac{t+b}{t-b} & 0 \\
0 & 0 & -\frac{f+n}{f-n} & -\frac{2nf}{f-n} \\
0 & 0 & -1 & 0
\end{bmatrix}
$$

n: near, f: far, t: top, b: bottom, l: left, r: right

Perspective projection

At this point, it is not necessary to understand how these matrices were built. Suffice it to say they are standard matrices in the 3D world. Both assume the viewer (=camera) is located at position (0,0,0) and is looking in the direction (0,0,-1).

There exists a second form of the perspective matrix that might be easier to manipulate. Instead of specifying the right/left/top/bottom planes, we'll use the field of view in the vertical direction together with the aspect ratio:

$$
\begin{bmatrix}
\frac{c}{\mathit{aspect}} & 0 & 0 & 0 \\
0 & c & 0 & 0 \\
0 & 0 & \frac{f+n}{n-f} & \frac{2nf}{n-f} \\
0 & 0 & -1 & 0
\end{bmatrix}
$$

n: near, f: far, c: cotangent(fovy/2)

Perspective projection

where fovy specifies the field of view angle in the y direction

and aspect specifies the aspect ratio that determines the field of view in

the x direction.
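Here is a NumPy sketch of this second form. It assumes that fovy is given in degrees and that c is the cotangent of half the fovy angle, as in the usual gluPerspective definition. The perspective and to_ndc functions are written here for illustration and are not the glumpy implementation:

```python
import numpy as np

def perspective(fovy, aspect, n, f):
    """Perspective matrix from a vertical field of view given in degrees."""
    c = 1.0 / np.tan(np.radians(fovy) / 2.0)    # cotangent of half the angle
    return np.array([
        [c/aspect, 0, 0,           0],
        [0,        c, 0,           0],
        [0,        0, (f+n)/(n-f), 2*n*f/(n-f)],
        [0,        0, -1,          0]])

def to_ndc(P, point):
    """Project a 3D point and apply the perspective divide by w."""
    clip = P @ np.append(point, 1.0)
    return clip[:3] / clip[3]

P = perspective(45.0, 1.0, 2.0, 100.0)
on_near = to_ndc(P, [0, 0, -2.0])       # a point on the near plane
on_far = to_ndc(P, [0, 0, -100.0])      # a point on the far plane
```

After the divide by w, the near plane lands at z = -1 and the far plane at z = +1, which are the limits of the normalized device coordinates.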

Model and view matrices

We are almost done with matrices. You may have guessed that the above matrices require the viewing volume to be in the z direction. We could design our 3D scene such that all objects are within this direction but it would not be very convenient. So instead, we use a view matrix that maps the world space to camera space. This is pretty much as if we were placing the camera at a given position and looking toward a given direction. In addition, we can further refine the whole pipeline by providing a model matrix that maps the object's local coordinate space into world space. For example, this is useful for rotating an object around its center. To sum up, we need:

- Model matrix maps from an object's local coordinate space into world space

- View matrix maps from world space to camera space

- Projection matrix maps from camera to screen space

This corresponds to the model-view-projection model. If you have read the whole chapter carefully, you may have guessed the corresponding GLSL shader:

    uniform mat4 view;
    uniform mat4 model;
    uniform mat4 projection;
    attribute vec3 P;
    void main(void)
    {
        gl_Position = projection*view*model*vec4(P, 1.0);
    }
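The same chain can be mimicked on the CPU. The sketch below uses illustrative matrices written in the column-vector convention (a uniform scaling for the model, a translation for the view and, as a placeholder, the identity for the projection) to show that the three matrices can be combined once and then applied to every vertex:

```python
import numpy as np

model = np.diag([2.0, 2.0, 2.0, 1.0])   # local to world: scale the object by 2
view = np.eye(4)
view[2, 3] = -5.0                       # world to camera: push the scene to z - 5
projection = np.eye(4)                  # placeholder for a real projection matrix

P = np.array([1.0, 1.0, 1.0])           # one vertex in local coordinates
vertex = np.append(P, 1.0)              # same role as vec4(P, 1.0) in the shader

step_by_step = projection @ (view @ (model @ vertex))
mvp = projection @ view @ model         # combined model-view-projection matrix
combined = mvp @ vertex
```

The vertex is first scaled to (2, 2, 2) and then moved to (2, 2, -3), whether the matrices are applied one by one or as a single combined matrix.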

Rendering a cube

.

We now have all the pieces needed to render a simple 3D scene, that is, a rotating cube as shown in the teaser image above. But we first need to create the cube and to tell OpenGL how we want to actually project it on the screen.

Object creation

We need to define what we mean by a cube since there is no such thing as a cube in OpenGL. A cube, when seen from the outside, has 6 faces, each being a square. We just saw that to render a square, we need two triangles. So, with 6 faces, each of them being made of 2 triangles, we need 12 triangles.

How many vertices? 12 triangles × 3 vertices per triangle = 36 vertices might be a reasonable answer. However, we can also notice that each vertex is actually part of 3 different faces. We'll thus use no more than 8 vertices and explicitly tell OpenGL how to draw 6 faces with them:

    V = np.zeros(8, [("position", np.float32, 3)])
    V["position"] = [[ 1, 1, 1], [-1, 1, 1], [-1,-1, 1], [ 1,-1, 1],
                     [ 1,-1,-1], [ 1, 1,-1], [-1, 1,-1], [-1,-1,-1]]

These vertices describe a cube centered on (0,0,0) that goes from (-1,-1,-1) to (+1,+1,+1). Unfortunately, we cannot use gl.GL_TRIANGLE_STRIP as we did for the quad. If you remember how this rendering primitive considers vertices as a succession of triangles, you should also realize there is no way to organize our 8 vertices, each used once, into a triangle strip that would describe our cube. This means we have to tell OpenGL explicitly what our triangles are, i.e. we need to describe triangles in terms of vertex indices (relative to the V array we just defined):

    I = np.array([0,1,2, 0,2,3,  0,3,4, 0,4,5,  0,5,6, 0,6,1,
                  1,6,7, 1,7,2,  7,4,3, 7,3,2,  4,7,6, 4,6,5], dtype=np.uint32)
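It is easy to get such an index list wrong, so a quick check on the CPU can be useful. The following sketch uses plain NumPy arrays (a regular array for V instead of the structured one, to keep things short) and verifies that the 36 indices describe 12 triangles, each of them lying on one of the 6 faces of the cube:

```python
import numpy as np

V = np.array([[ 1, 1, 1], [-1, 1, 1], [-1,-1, 1], [ 1,-1, 1],
              [ 1,-1,-1], [ 1, 1,-1], [-1, 1,-1], [-1,-1,-1]], dtype=np.float32)
I = np.array([0,1,2, 0,2,3,  0,3,4, 0,4,5,  0,5,6, 0,6,1,
              1,6,7, 1,7,2,  7,4,3, 7,3,2,  4,7,6, 4,6,5], dtype=np.uint32)

triangles = V[I].reshape(-1, 3, 3)    # (12 triangles, 3 vertices, xyz)

# A triangle lies on a cube face when its 3 vertices share one coordinate.
shared = (triangles == triangles[:, :1, :]).all(axis=1)    # shape (12, 3)
on_face = shared.any(axis=1)

# Collect the distinct faces as (axis, coordinate value) pairs.
faces = set()
for tri, s in zip(triangles, shared):
    axis = int(np.argmax(s))                  # index of the shared coordinate
    faces.add((axis, float(tri[0, axis])))    # e.g. (2, 1.0) is the z = +1 face
```

Every triangle sits on a face and the 6 faces are all covered, two triangles each.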

This index array I needs to be uploaded to the GPU as well. Using glumpy, the easiest way is to use a VertexBuffer for vertex data and an IndexBuffer for index data:

    V = V.view(gloo.VertexBuffer)
    I = I.view(gloo.IndexBuffer)

We can now proceed with the actual creation of the cube and upload the vertices. Note that we do not specify the count argument because we'll explicitly bind our own vertex buffer. The vertex and fragment shader sources are given below.

    cube = gloo.Program(vertex, fragment)
    cube["position"] = V

And we'll use the indices buffer when actually rendering the cube.

Scene setup

The next step is to define the scene. This means we need to say where our objects are located and how they are oriented in space, where our camera is located, what kind of camera we want to use and ultimately, where we are looking. In this simple example, we'll use the model-view-projection model that requires 3 matrices:

- model: maps from an object's local coordinate space into world space
- view: maps from world space to camera space
- projection: maps from camera to screen space

The corresponding vertex shader code is then:

    vertex = """
    uniform mat4   model;
    uniform mat4   view;
    uniform mat4   projection;
    attribute vec3 position;
    void main()
    {
        gl_Position = projection * view * model * vec4(position,1.0);
    } """

and we'll keep the fragment shader to a minimum for now (red color):

    fragment = """
    void main()
    {
        gl_FragColor = vec4(1.0, 0.0, 0.0, 1.0);
    } """

For the projection, we'll use the default perspective camera that is available from the glumpy.glm module (that also defines ortho, frustum and perspective matrices as well as rotation, translation and scaling operations). This default perspective camera is located at the origin and looks in the negative z direction with the up direction pointing toward the positive y-axis. If we leave our cube at the origin, the camera would be inside the cube and we would not see much. So let's first create a view matrix that is a translation along the z-axis:

    import numpy as np
    from glumpy import glm

    view = np.eye(4,dtype=np.float32)
    glm.translate(view, 0,0,-5)

Next, we need to define the model matrix and the projection matrix. However, we won't set them up right away because the model matrix will be updated in the on_draw function in order to rotate the cube, while the projection matrix will be updated in the on_resize function as soon as the viewport changes (which is the case when the window is first created). For now, both are initialized to the identity:

    projection = np.eye(4,dtype=np.float32)
    model = np.eye(4,dtype=np.float32)
    cube['model'] = model
    cube['view'] = view
    cube['projection'] = projection

In the resize function, we update the projection with a perspective matrix, taking the window aspect ratio into account. We define the viewing volume with near=2.0, far=100.0 and a field of view of 45°:

    @window.event
    def on_resize(width, height):
        ratio = width / float(height)
        cube['projection'] = glm.perspective(45.0, ratio, 2.0, 100.0)
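To see what such a perspective matrix does with the near and far values, here is a sketch of the classic textbook formula (the one used by gluPerspective), written in pure Python with the column-vector convention. The functions `perspective` and `to_ndc` below are hypothetical illustrations, not the glumpy implementation, and the memory layout used internally by glumpy.glm may differ.

```python
import math

def perspective(fovy_deg, aspect, znear, zfar):
    """Textbook perspective matrix (column-vector convention)."""
    f = 1.0 / math.tan(math.radians(fovy_deg) / 2.0)
    return [[f / aspect, 0.0, 0.0, 0.0],
            [0.0, f, 0.0, 0.0],
            [0.0, 0.0, (zfar + znear) / (znear - zfar),
             2.0 * zfar * znear / (znear - zfar)],
            [0.0, 0.0, -1.0, 0.0]]

def to_ndc(m, point):
    """Project a 3D camera-space point and apply the perspective divide."""
    x, y, z = point
    v = [x, y, z, 1.0]
    clip = [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]
    return [c / clip[3] for c in clip[:3]]

# Same parameters as in on_resize, for an assumed 800x600 window
P = perspective(45.0, 800 / 600, 2.0, 100.0)
near_ndc = to_ndc(P, (0.0, 0.0, -2.0))     # point on the near plane
far_ndc = to_ndc(P, (0.0, 0.0, -100.0))    # point on the far plane
print(near_ndc[2], far_ndc[2])             # approximately -1 and +1
```

Points on the near plane end up at depth -1 and points on the far plane at depth +1 in normalized device coordinates; anything outside this range is clipped. This is why the cube, placed 5 units away from the camera, sits comfortably inside the viewing volume.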

For the model matrix, we want the cube to rotate around its center. We do that by composing a rotation about the z axis (theta), then about the y axis (phi):

    phi, theta = 0,0

    @window.event
    def on_draw(dt):
        global phi, theta
        window.clear()
        cube.draw(gl.GL_TRIANGLES, I)

        # Make cube rotate
        theta += 1.0 # degrees
        phi += 1.0 # degrees
        model = np.eye(4, dtype=np.float32)
        glm.rotate(model, theta, 0, 0, 1)
        glm.rotate(model, phi, 0, 1, 0)
        cube['model'] = model

Actual rendering

Figure

We're now almost ready to render the whole scene but we need to modify the initialization a little bit to enable depth testing:

    @window.event
    def on_init():
        gl.glEnable(gl.GL_DEPTH_TEST)

This is needed because we're now dealing with 3D, meaning some rendered triangles may be behind others. OpenGL will take care of that provided we declared our context with a depth buffer, which is the default in glumpy.
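The principle of the depth test can be sketched in a few lines of pure Python. This is only an illustration of the idea, not what the GPU literally executes: the function `draw_fragments` and the fragment tuples are hypothetical. The sketch uses the OpenGL defaults, that is, a depth buffer cleared to 1.0 and a "less than" comparison.

```python
def draw_fragments(fragments, width, height):
    """Keep, for each pixel, the color of the fragment closest to the camera."""
    depth = [[1.0] * width for _ in range(height)]    # depth buffer cleared to 1.0
    color = [[None] * width for _ in range(height)]   # color buffer
    for x, y, z, c in fragments:
        if z < depth[y][x]:       # the fragment passes the depth test
            depth[y][x] = z       # remember the new closest depth
            color[y][x] = c       # and overwrite the color
    return color

# Three fragments landing on the same pixel, submitted in arbitrary order
fragments = [(0, 0, 0.8, "back"), (0, 0, 0.3, "front"), (0, 0, 0.5, "middle")]
image = draw_fragments(fragments, 1, 1)
print(image[0][0])   # front
```

Without the test, the last fragment drawn ("middle") would win, regardless of its distance to the camera.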

As previously, we'll run the program for exactly 360 frames in order to make an endless animation:

app.run(framerate=60, framecount=360)
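Why 360 frames? Since both angles increase by one degree per frame, the model matrix after 360 frames is the same as at frame 0, so the recorded frames can be played in a loop without any visible jump. The sketch below checks this with hypothetical pure-Python helpers (`rot_z`, `rot_y`, `model_at`), using 3x3 rotation matrices in the column-vector convention rather than glumpy calls.

```python
import math

def rot_z(deg):
    """Rotation about the z axis."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def rot_y(deg):
    """Rotation about the y axis."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def matmul3(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def model_at(frame, step=1.0):
    """Rotation for a given frame: z rotation first, then y rotation."""
    angle = frame * step
    return matmul3(rot_y(angle), rot_z(angle))

def max_difference(a, b):
    """Largest absolute difference between two 3x3 matrices."""
    return max(abs(a[i][j] - b[i][j]) for i in range(3) for j in range(3))

loop_error = max_difference(model_at(0), model_at(360))   # tiny rounding error
half_error = max_difference(model_at(0), model_at(180))   # clearly different
print(loop_error < 1e-9, half_error > 1.0)                # True True
```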

Complete source code: code/chapter-05/solid-cube.py

Variations

Colored cube

The previous cube is not very interesting because we used a single color for all the faces and this tends to hide the 3D structure. We can fix this by adding some colors and in the process, we'll discover why glumpy is so useful. To add color per vertex to the cube, we simply define the vertex structure as:

    V = np.zeros(8, [("position", np.float32, 3),
                     ("color",    np.float32, 4)])
    V["position"] = [[ 1, 1, 1], [-1, 1, 1], [-1,-1, 1], [ 1,-1, 1],
                     [ 1,-1,-1], [ 1, 1,-1], [-1, 1,-1], [-1,-1,-1]]
    V["color"]    = [[0, 1, 1, 1], [0, 0, 1, 1], [0, 0, 0, 1], [0, 1, 0, 1],
                     [1, 1, 0, 1], [1, 1, 1, 1], [1, 0, 1, 1], [1, 0, 0, 1]]

And we're done! Well, actually, we also need to slightly modify the vertex shader since color is now an attribute that needs to be passed to the fragment shader:

    vertex = """
    uniform mat4   model;      // Model matrix
    uniform mat4   view;       // View matrix
    uniform mat4   projection; // Projection matrix
    attribute vec4 color;      // Vertex color
    attribute vec3 position;   // Vertex position
    varying vec4   v_color;    // Interpolated fragment color (out)
    void main()
    {
        v_color = color;
        gl_Position = projection * view * model * vec4(position,1.0);
    } """

    fragment = """
    varying vec4   v_color;    // Interpolated fragment color (in)
    void main()
    {
        gl_FragColor = v_color;
    } """
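The "interpolated" part of a varying can be illustrated outside of OpenGL. In the sketch below, a fragment inside a triangle receives a weighted mix of the three vertex colors, the weights being its barycentric coordinates. The function `interpolate` is a hypothetical helper, and the sketch deliberately ignores the perspective correction that the rasterizer applies.

```python
def interpolate(weights, values):
    """Mix per-vertex values using barycentric weights (which sum to 1)."""
    size = len(values[0])
    return tuple(sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(size))

# RGBA colors attached to the three vertices of a triangle
red, green, blue = (1, 0, 0, 1), (0, 1, 0, 1), (0, 0, 1, 1)

# A fragment located exactly on the first vertex gets that vertex color
at_vertex = interpolate((1.0, 0.0, 0.0), (red, green, blue))

# A fragment at the center of the triangle gets an equal mix of the three
center = interpolate((1 / 3, 1 / 3, 1 / 3), (red, green, blue))

print(at_vertex)   # (1.0, 0.0, 0.0, 1.0)
```

This is why each face of the colored cube shows a smooth gradient between its four corner colors.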

Figure

Furthermore, since our vertex buffer fields correspond exactly to program attributes, we can directly bind it:

    cube = gloo.Program(vertex, fragment)
    cube.bind(V)

But we could also have written

    cube = gloo.Program(vertex, fragment)
    cube["position"] = V["position"]
    cube["color"] = V["color"]

Complete source code: code/chapter-05/color-cube.py

Outlined cube

Figure

GL_POLYGON_OFFSET_FILL allows coincident surfaces to be drawn properly.

We can make the cube a bit nicer by outlining it using black lines. To outline the cube, we need to draw lines between pairs of vertices on each face: 4 lines each for the back and front faces and 2 lines each for the top and bottom faces. Why only 2 lines for top and bottom? Because lines are shared between the faces. So overall we need 12 lines and we need to compute the corresponding indices (I did it for you):

    O = np.array([0,1, 1,2, 2,3, 3,0,
                  4,7, 7,6, 6,5, 5,4,
                  0,5, 1,6, 2,7, 3,4], dtype=np.uint32)
    O = O.view(gloo.IndexBuffer)
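If you would rather not trust hand-written indices, the 12 edges can also be derived from the vertex positions: two corners of the cube are joined by an edge exactly when their positions differ along a single axis. The sketch below is pure Python and the function name `cube_edges` is hypothetical; it finds the same 12 pairs as the list above, possibly in a different order.

```python
from itertools import combinations

# Same vertex positions as in the cube definition
positions = [( 1, 1, 1), (-1, 1, 1), (-1, -1, 1), ( 1, -1, 1),
             ( 1, -1, -1), ( 1, 1, -1), (-1, 1, -1), (-1, -1, -1)]

def cube_edges(points):
    """Return index pairs of corners that differ along exactly one axis."""
    edges = []
    for (i, p), (j, q) in combinations(enumerate(points), 2):
        if sum(a != b for a, b in zip(p, q)) == 1:
            edges.append((i, j))
    return edges

edges = cube_edges(positions)
outline = [index for edge in edges for index in edge]   # flat list for GL_LINES
print(len(edges), len(outline))                         # 12 24
```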

We then need to draw the cube twice: once using triangles with the index buffer I, and once using lines with the outline index buffer O. We also need to add some OpenGL black magic to make things nice. It's not very important to understand it at this point, but roughly, the idea is to make sure lines are drawn "above" the cube, because each line lies exactly on the surface of a face:

    @window.event
    def on_draw(dt):
        global phi, theta, duration

        window.clear()

        # Filled cube
        gl.glDisable(gl.GL_BLEND)
        gl.glEnable(gl.GL_DEPTH_TEST)
        gl.glEnable(gl.GL_POLYGON_OFFSET_FILL)
        cube['ucolor'] = .75, .75, .75, 1
        cube.draw(gl.GL_TRIANGLES, I)

        # Outlined cube
        gl.glDisable(gl.GL_POLYGON_OFFSET_FILL)
        gl.glEnable(gl.GL_BLEND)
        gl.glDepthMask(gl.GL_FALSE)
        cube['ucolor'] = 0, 0, 0, 1
        cube.draw(gl.GL_LINES, O)
        gl.glDepthMask(gl.GL_TRUE)

        # Rotate cube
        theta += 1.0 # degrees
        phi += 1.0 # degrees
        model = np.eye(4, dtype=np.float32)
        glm.rotate(model, theta, 0, 0, 1)
        glm.rotate(model, phi, 0, 1, 0)
        cube['model'] = model

Complete source code: code/chapter-05/outlined-cube.py

Textured cube

Figure

For making a textured cube, we need a texture (a.k.a. an image) and some coordinates to tell OpenGL how to map it to the cube faces. Texture coordinates are normalized and should be inside the [0,1] range (actually, texture coordinates can be pretty much anything but for the sake of simplicity, we'll stick to the [0,1] range). Since we are displaying a cube, we'll use one texture per side and the texture coordinates are quite easy to define: [0,0], [0,1], [1,0] and [1,1]. Of course, we have to take care to assign the right texture coordinates to the right vertex, or your texture will be messed up.

Furthermore, we'll need some extra work because we can no longer share our vertices between faces, since they won't share their texture coordinates. We thus need a set of 24 vertices (6 faces × 4 vertices). We'll use the dedicated function below, which takes care of generating the right texture coordinates.

    def cube():
        vtype = [('position', np.float32, 3),
                 ('texcoord', np.float32, 2)]
        itype = np.uint32

        # Vertices positions
        p = np.array([[1, 1, 1], [-1, 1, 1], [-1, -1, 1], [1, -1, 1],
                      [1, -1, -1], [1, 1, -1], [-1, 1, -1], [-1, -1, -1]],
                     dtype=float)

        # Texture coords
        t = np.array([[0, 0], [0, 1], [1, 1], [1, 0]])

        faces_p = [0, 1, 2, 3,  0, 3, 4, 5,  0, 5, 6, 1,
                   1, 6, 7, 2,  7, 4, 3, 2,  4, 7, 6, 5]
        faces_t = [0, 1, 2, 3,  0, 1, 2, 3,  0, 1, 2, 3,
                   3, 2, 1, 0,  0, 1, 2, 3,  0, 1, 2, 3]

        vertices = np.zeros(24, vtype)
        vertices['position'] = p[faces_p]
        vertices['texcoord'] = t[faces_t]

        filled = np.resize(
            np.array([0, 1, 2, 0, 2, 3], dtype=itype), 6 * (2 * 3))
        filled += np.repeat(4 * np.arange(6, dtype=itype), 6)

        vertices = vertices.view(gloo.VertexBuffer)
        filled = filled.view(gloo.IndexBuffer)

        return vertices, filled
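The two lines computing the filled indices are compact but a bit cryptic. They split each face (4 consecutive vertices) into two triangles using the pattern 0,1,2 and 0,2,3, shifted by 4 for each new face. A plain Python equivalent, with the hypothetical name `quad_indices`, could look like this:

```python
def quad_indices(n_faces):
    """Two triangles (0,1,2) and (0,2,3) per quad, 4 vertices per quad."""
    indices = []
    for face in range(n_faces):
        base = 4 * face   # index of the first vertex of this face
        indices.extend(base + offset for offset in (0, 1, 2, 0, 2, 3))
    return indices

filled = quad_indices(6)
print(len(filled))    # 36
print(filled[:12])    # [0, 1, 2, 0, 2, 3, 4, 5, 6, 4, 6, 7]
```

We get 36 indices (6 faces × 2 triangles × 3 vertices) referencing the 24 vertices.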

Now, inside the fragment shader, we have access to the texture:

    vertex = """
    uniform mat4   model;      // Model matrix
    uniform mat4   view;       // View matrix
    uniform mat4   projection; // Projection matrix
    attribute vec3 position;   // Vertex position
    attribute vec2 texcoord;   // Vertex texture coordinates
    varying vec2   v_texcoord; // Interpolated fragment texture coordinates (out)

    void main()
    {
        // Assign varying variables
        v_texcoord = texcoord;

        // Final position
        gl_Position = projection * view * model * vec4(position,1.0);
    } """

    fragment = """
    uniform sampler2D texture; // Texture
    varying vec2 v_texcoord;   // Interpolated fragment texture coordinates (in)

    void main()
    {
        // Get texture color
        gl_FragColor = texture2D(texture, v_texcoord);
    } """
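To get an intuition of what the texture lookup does, here is a rough pure-Python sketch of a texture read with normalized coordinates. It assumes the simplest possible behaviour (nearest texel, coordinates clamped to the [0,1] range); the actual result in OpenGL depends on the filtering and wrapping parameters of the texture. The function `texture2d_nearest` is a hypothetical name.

```python
def texture2d_nearest(texture, u, v):
    """Return the texel closest to the normalized coordinates (u, v)."""
    height, width = len(texture), len(texture[0])
    u = min(max(u, 0.0), 1.0)               # clamp to the [0,1] range
    v = min(max(v, 0.0), 1.0)
    x = min(int(u * width), width - 1)      # column index
    y = min(int(v * height), height - 1)    # row index
    return texture[y][x]

# A tiny 2x2 checkerboard texture
checker = [["black", "white"],
           ["white", "black"]]

print(texture2d_nearest(checker, 0.25, 0.25))   # black
print(texture2d_nearest(checker, 0.75, 0.25))   # white
print(texture2d_nearest(checker, 1.00, 1.00))   # black
```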

Complete source code: code/chapter-05/textured-cube.py

Exercises

Figure

Shader outline We've seen in the outlined cube section how to draw a thin line around the cube to enhance its shape. For this, we drew the cube twice, once for the cube itself and a second time for the outline. However, it is possible to get more or less the same result from within the shader in a single pass. The trick is to pass the (untransformed) position from the vertex shader to the fragment shader and to use this information to set the color of the fragment to either black or v_color. Starting from the color cube code, try to modify only the shader code (both vertex and fragment) to achieve the result on the right.

Solution: code/chapter-05/border-cube.py

Figure

Hollow cube We can play a bit more with the shader and try to draw only a thick border surrounded by a black outline. For the "transparent" part, you'll need to use the discard instruction from within the fragment shader, which instructs OpenGL not to display the fragment at all and to stop executing the shader for that fragment. Since nothing will be rendered, there is no need to process the rest of the shader.

Solution: code/chapter-05/hollow-cube.py

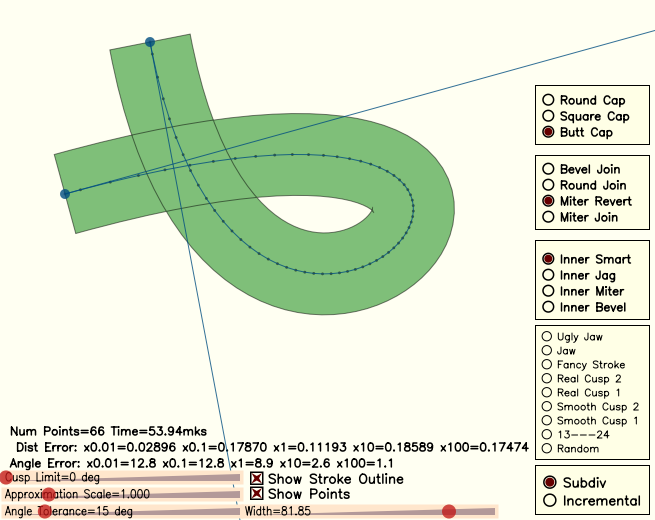

Anti-grain geometry


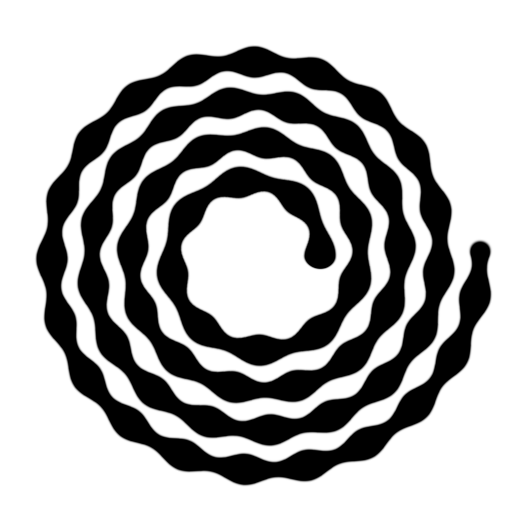

The late Maxim Shemanarev (1966-2013) designed the anti-grain library, a (very) high quality rendering engine written in C++. The library is both nicely written (one of the best C++ libraries I've seen, along with the Eigen library) and heavily documented, but its strongest feature is the quality of the rendering output, which is probably one of the best, even 10 years after the library was released (have a look at the demos). This is the level of quality we target in this book. However, OpenGL anti-aliasing techniques (even the latest ones) won't do the job and we'll need to take care of pretty much everything.

Antialiasing

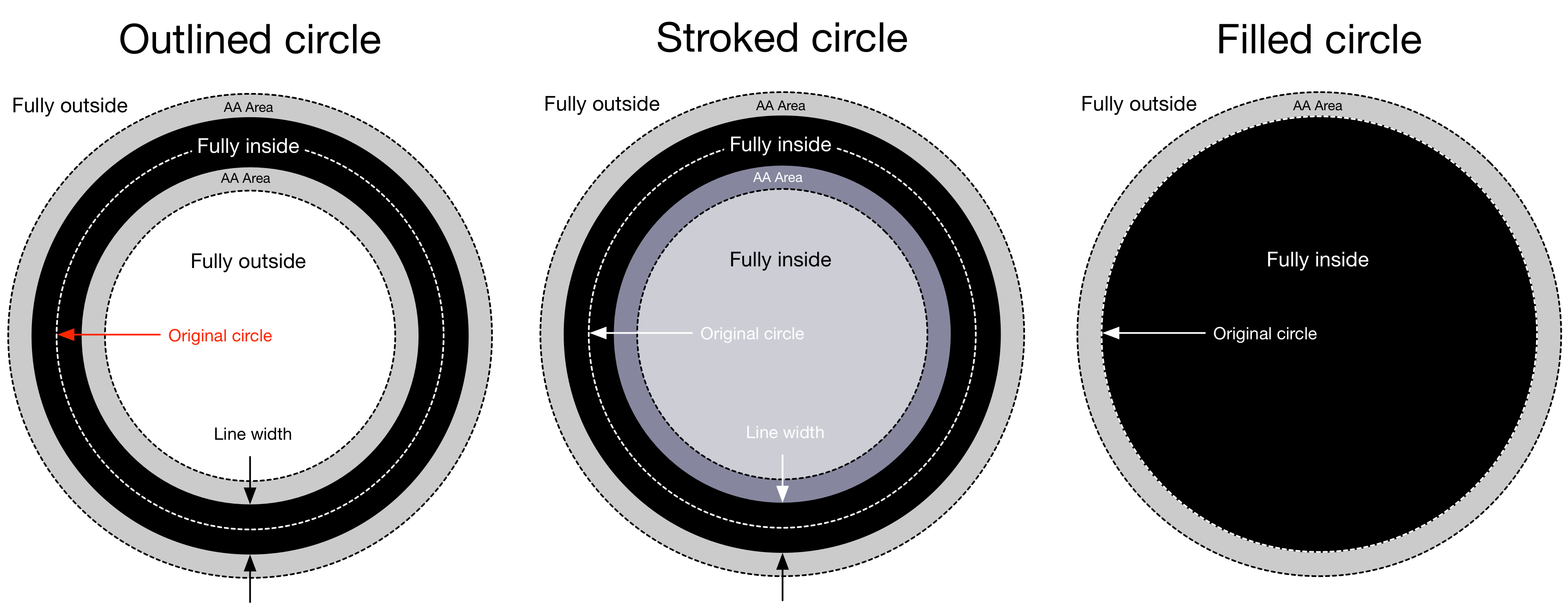

Figure

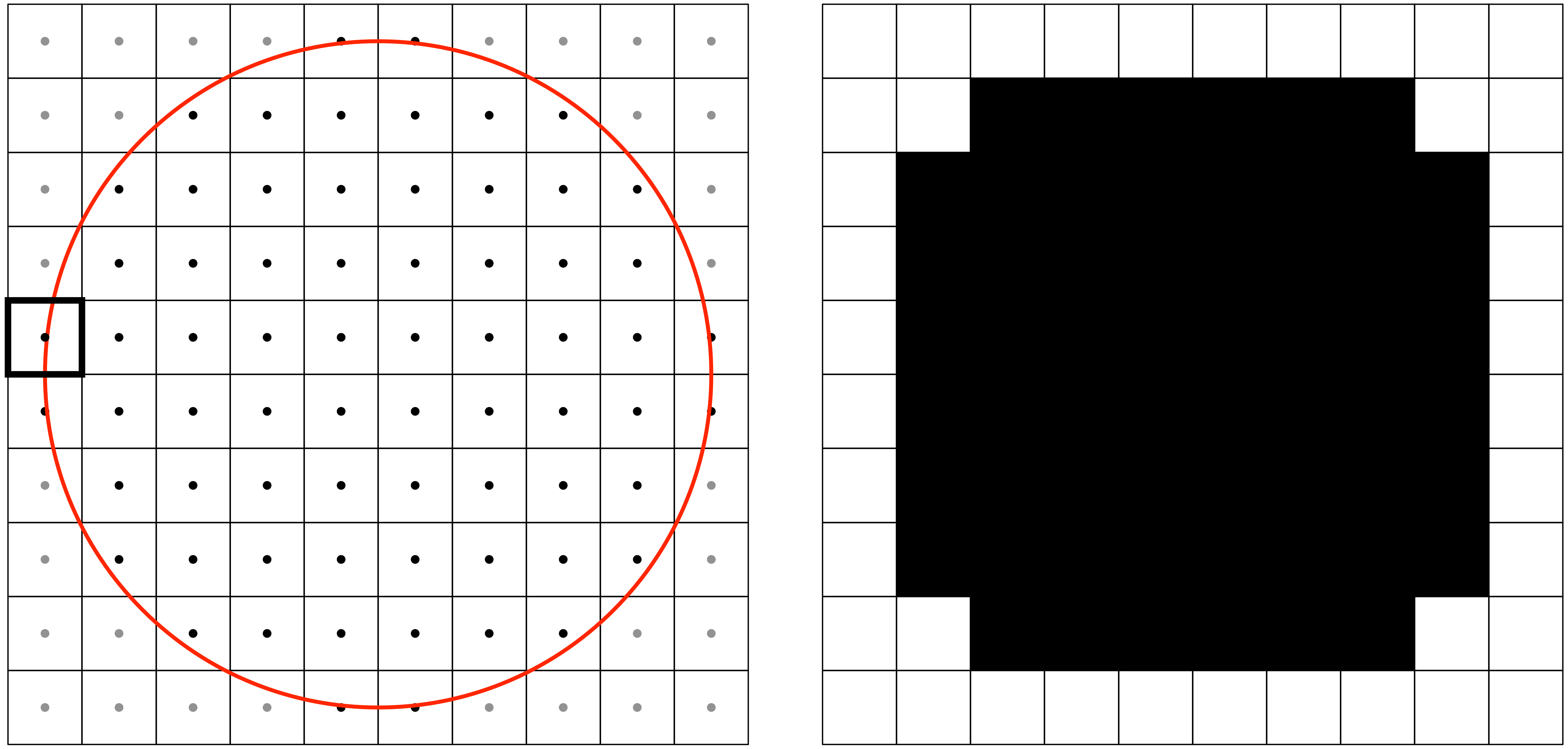

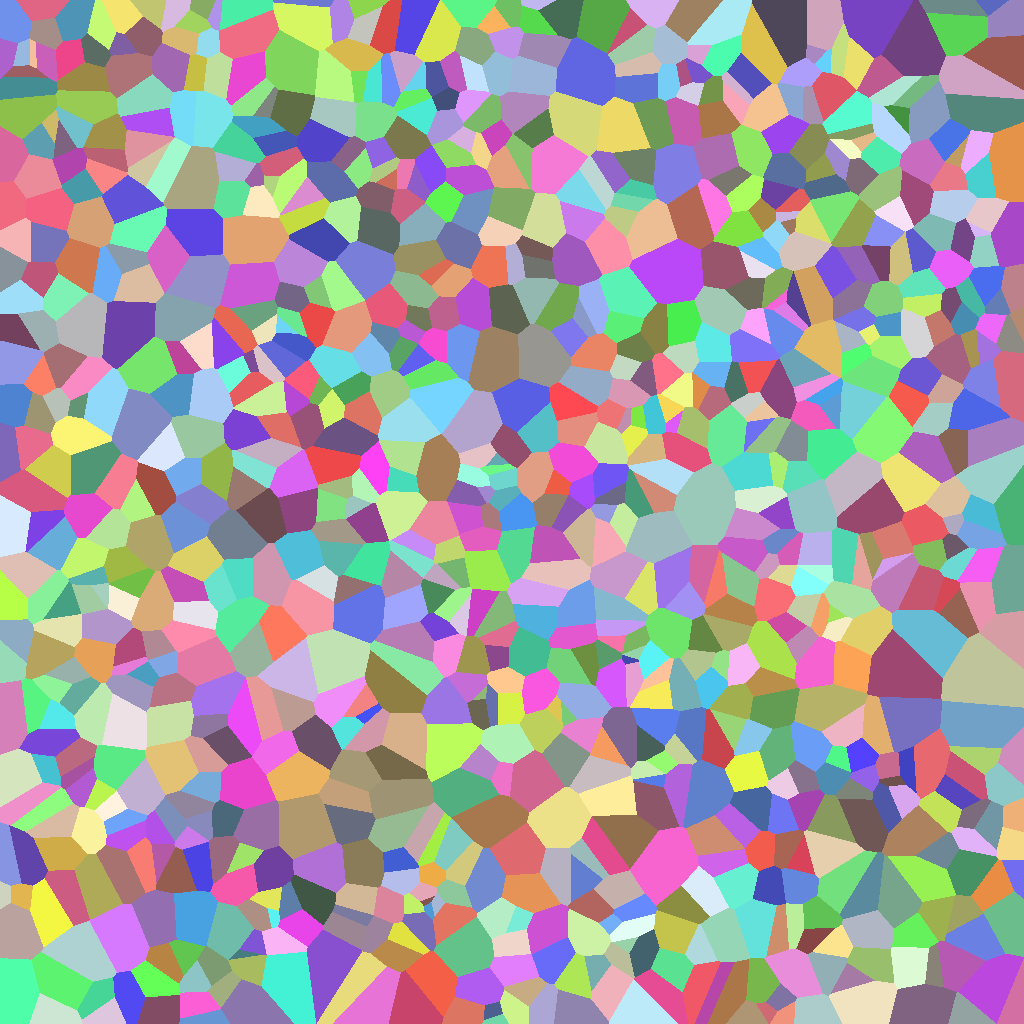

Aliasing is a well-known problem in signal processing where it can occur in time (temporal aliasing) or in space (spatial aliasing). In computer graphics, we're mostly interested in spatial aliasing (such as Moiré patterns or jaggies) and in ways to attenuate it. Let's first examine the origin of the problem from a practical point of view (have a look at Wikipedia for the background theory).

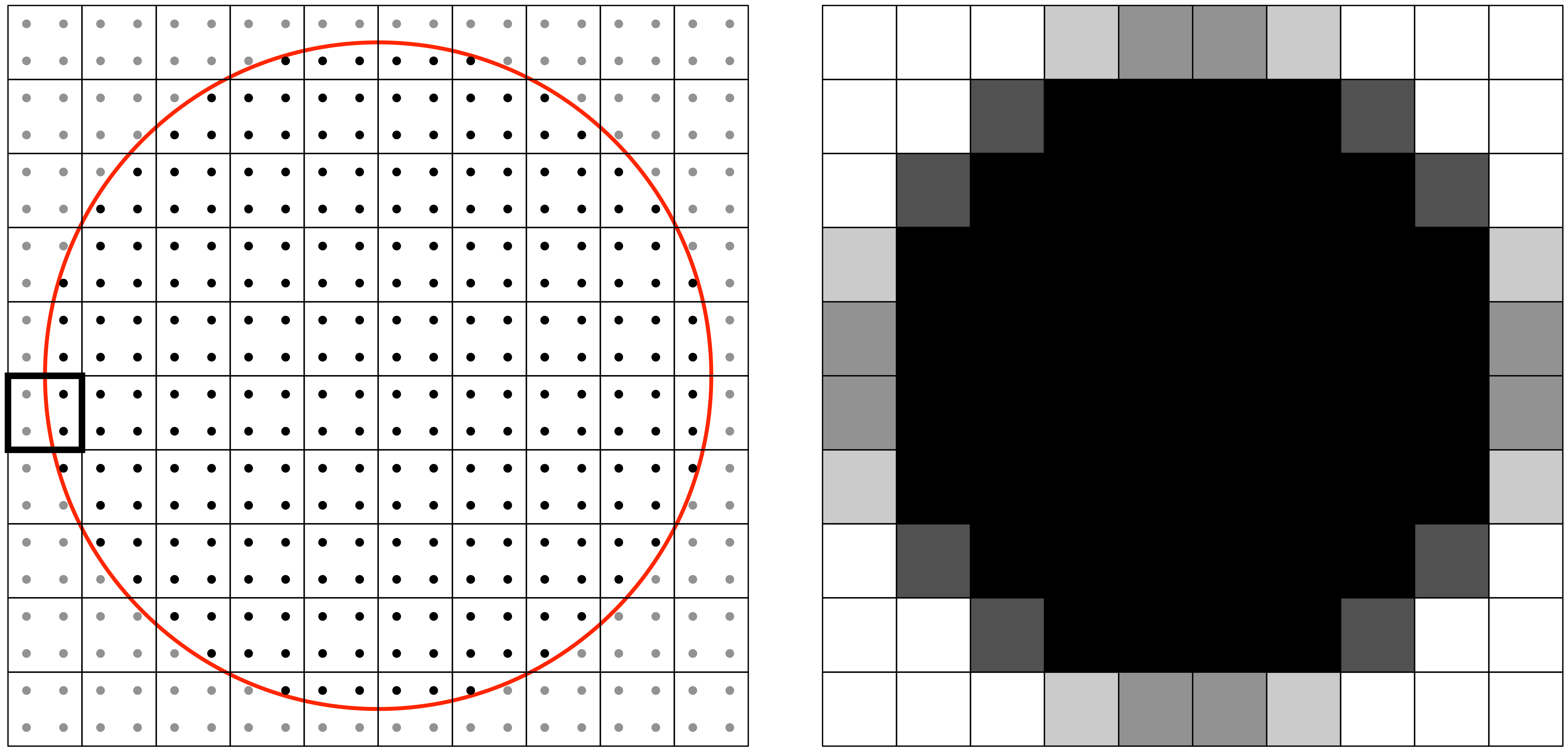

The figure on the right illustrates the problem when we want to render a disc onto a small area. The very first thing to notice is that pixels are not mathematical points and that the coordinates of a pixel usually refer to its center. This means that if we consider a pair of integer coordinates (i,j), then (i+Δx, j+Δy) designates the same pixel (with -0.5 < Δx, Δy < 0.5). In order to rasterize the mathematical description of our circle (center and radius), the rasterizer examines the center of each pixel to determine whether it falls inside or outside the shape. The result is illustrated on the right part of the figure. Even if the center of a pixel is very close to the circle but outside of it, the pixel is not painted, as shown by the thicker square on the figure. More generally, without anti-aliasing, a pixel will be either on (inside) or off (outside), leading to very hard jagged edges and, for small sizes, a very approximate shape.
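The on/off behaviour described above is easy to reproduce. The following sketch is a toy rasterizer in pure Python, not what OpenGL does internally, and the function name `rasterize_disc` is hypothetical. It tests the center of each pixel, taken at integer coordinates (i,j) as in the text, against a small disc and prints the result as text.

```python
def rasterize_disc(cx, cy, radius, width, height):
    """Paint a pixel only if its center (i, j) lies inside the disc."""
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            inside = (i - cx) ** 2 + (j - cy) ** 2 <= radius ** 2
            row += "#" if inside else "."
        rows.append(row)
    return rows

# A disc of radius 2.5 centered in a 7x7 pixel area
image = rasterize_disc(3.0, 3.0, 2.5, 7, 7)
print("\n".join(image))
# .......
# ..###..
# .#####.
# .#####.
# .#####.
# ..###..
# .......
```

At this size the disc looks more like an octagon than a circle, and the pixel (1,1), whose center is just outside the disc, is not painted at all.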

Sample based methods

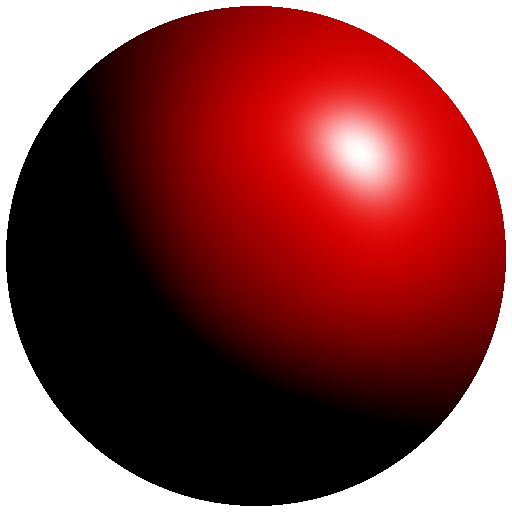

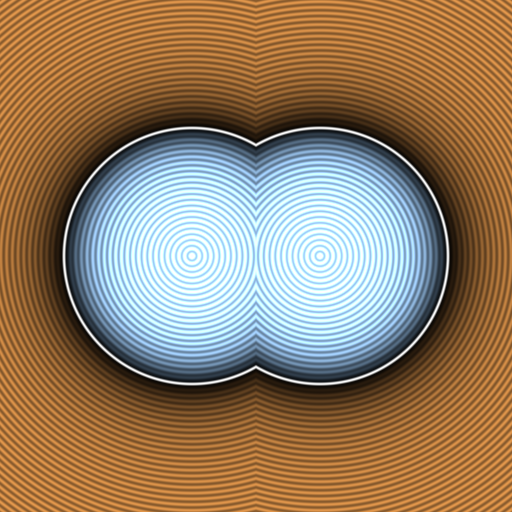

Figure

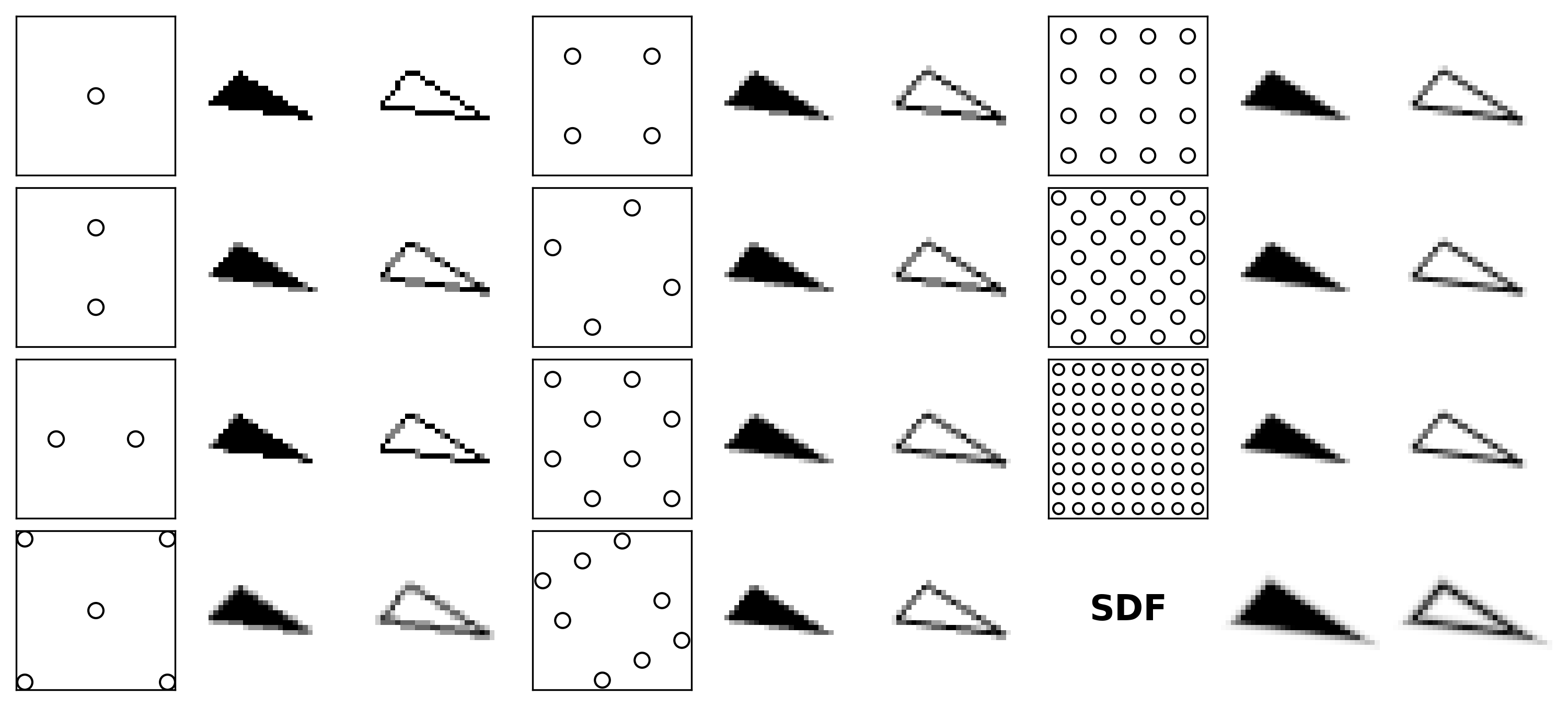

One of the simplest methods to reduce aliasing consists in using several samples to estimate the final color of a fragment. Instead of considering only the center of the pixel, one can use several samples over the whole surface of a pixel in order to get a better estimate, as shown on the figure on the right. A fragment that was previously considered outside, based on its center only, can now be considered half inside / half outside. This multi-sampling helps to attenuate the jagged edges we've seen in the previous section. On the figure, we used a very simple and straightforward multi-sample method, assigning fixed and equidistant locations for the four subsamples. There exist, however, better methods for multi-sampling, as shown by the impressive list of sample based antialiasing techniques:

- SSAA: Supersampling antialiasing

- MSAA: Multisample antialiasing

- FSAA: Full screen anti-aliasing

- FXAA: Fast approximate antialiasing

- SMAA: Subpixel morphological antialiasing

- DLAA: Directionally localized antialiasing

- NFAA: Normal filter antialiasing

- HRAA: High-Resolution antialiasing

- TXAA: Temporal antialiasing

- EQAA: Enhanced quality antialiasing

- CSAA: Coverage Sample antialiasing
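The simple four-subsample scheme described before the list can be sketched as follows. This is an illustration in pure Python, not any of the techniques listed above: the function name `coverage` and the subsample offsets of ±0.25 pixel are assumptions made for the example.

```python
def coverage(i, j, cx, cy, radius, offsets=(-0.25, 0.25)):
    """Fraction of the 2x2 subsamples of pixel (i, j) that fall inside the disc."""
    hits = 0
    for dy in offsets:
        for dx in offsets:
            if (i + dx - cx) ** 2 + (j + dy - cy) ** 2 <= radius ** 2:
                hits += 1
    return hits / len(offsets) ** 2

# Disc of radius 2.5 centered at (3, 3)
print(coverage(1, 1, 3.0, 3.0, 2.5))   # 0.25 (pixel center is outside)
print(coverage(1, 2, 3.0, 3.0, 2.5))   # 0.75 (pixel center is inside)
print(coverage(3, 3, 3.0, 3.0, 2.5))   # 1.0  (fully inside)
```

The resulting value can be used as an opacity. The pixel (1,1), which would be simply off with a center-only test, now gets a quarter of the disc color, and the pixel (1,2), which would be fully on, gets three quarters. With only four subsamples, however, there are only five possible levels of coverage, which hints at the limitations of such methods.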

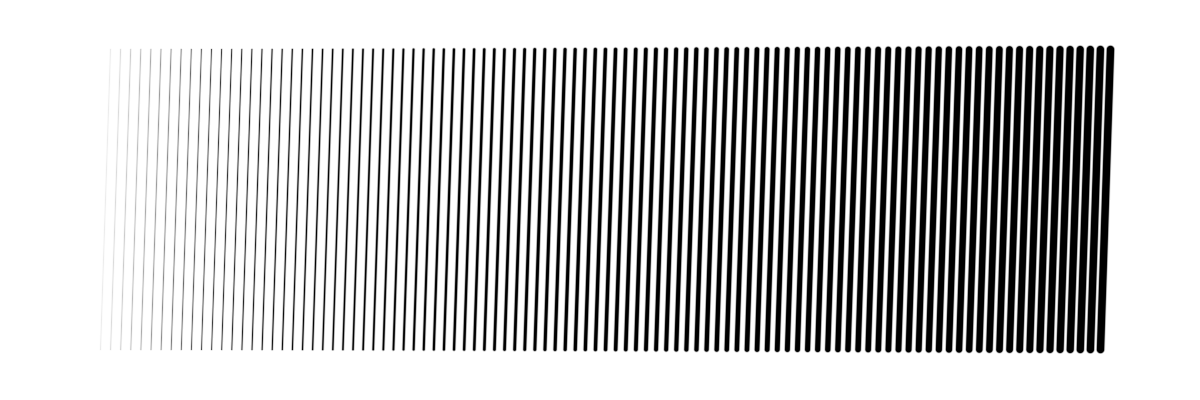

Depending on the performance you need to achieve (in terms of rendering quality and speed), one method might be better than another. However, you cannot expect to achieve top quality due to the inherent limitations of all these methods. While they are great for real-time rendering such as video games (and some of them are really good), they are hardly sufficient for scientific visualization, as illustrated on the figure below.

Figure

This is the reason why we won't use them in the rest of this book. If you want more details on these techniques, you can have a look at this reddit discussion explaining antialiasing modes or this nice overview of MSAA.

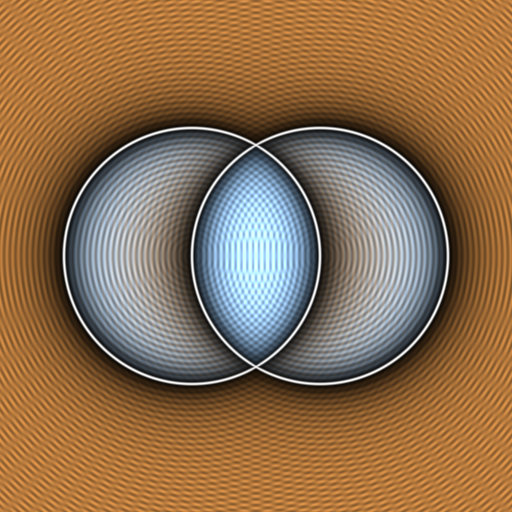

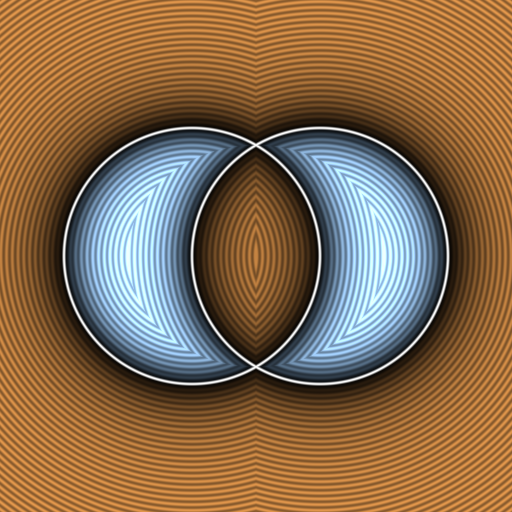

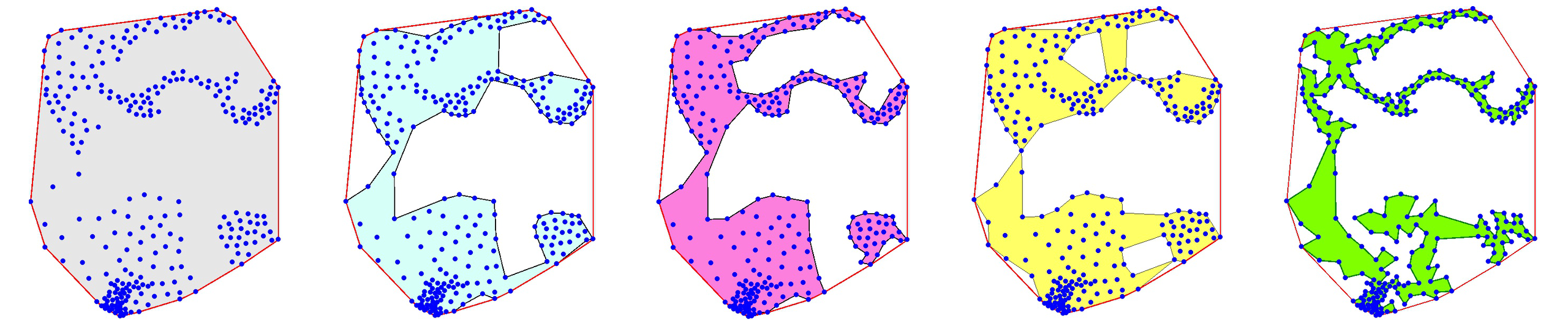

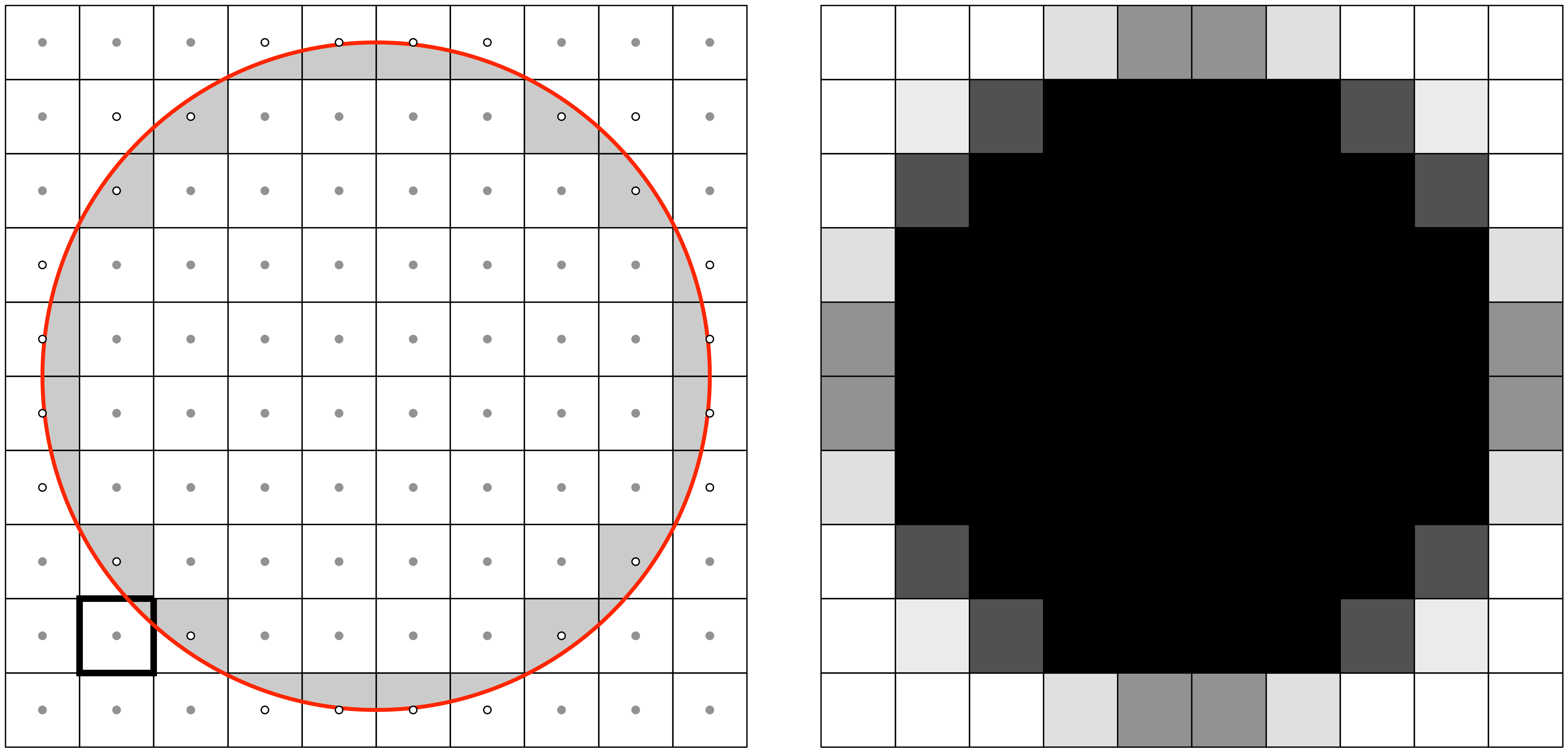

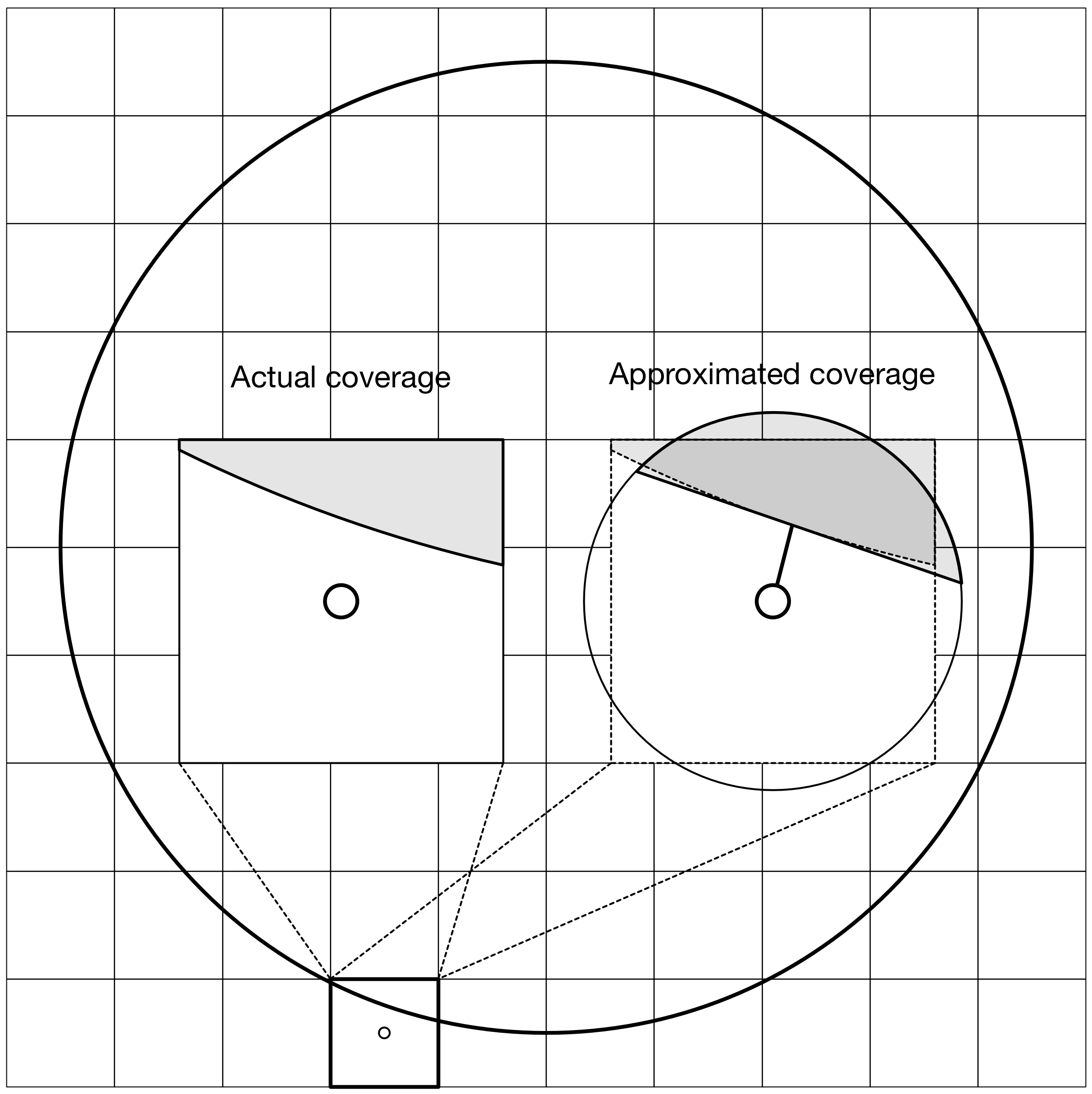

Coverage methods

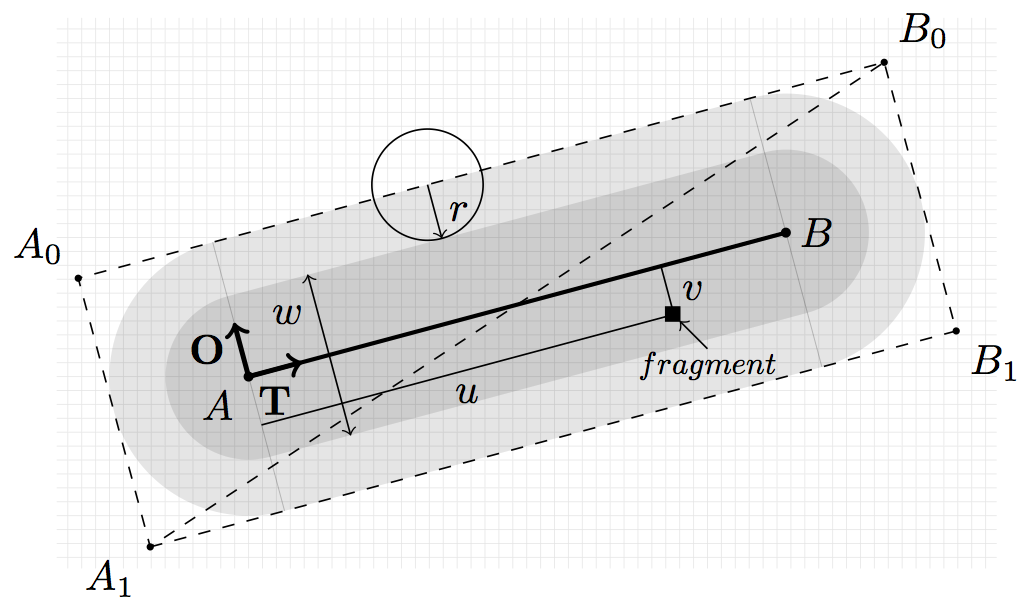

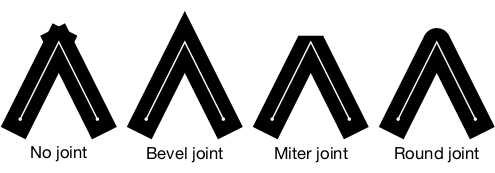

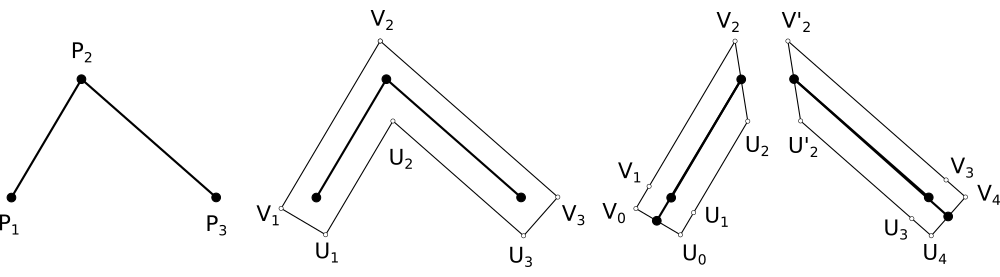

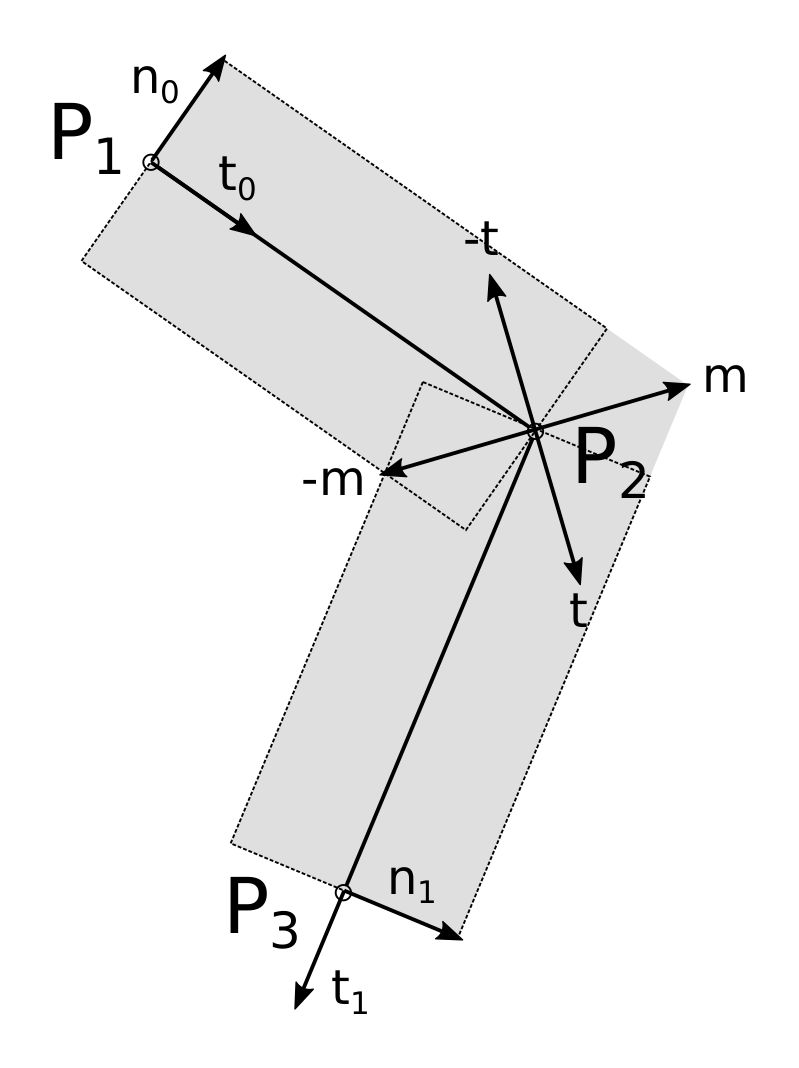

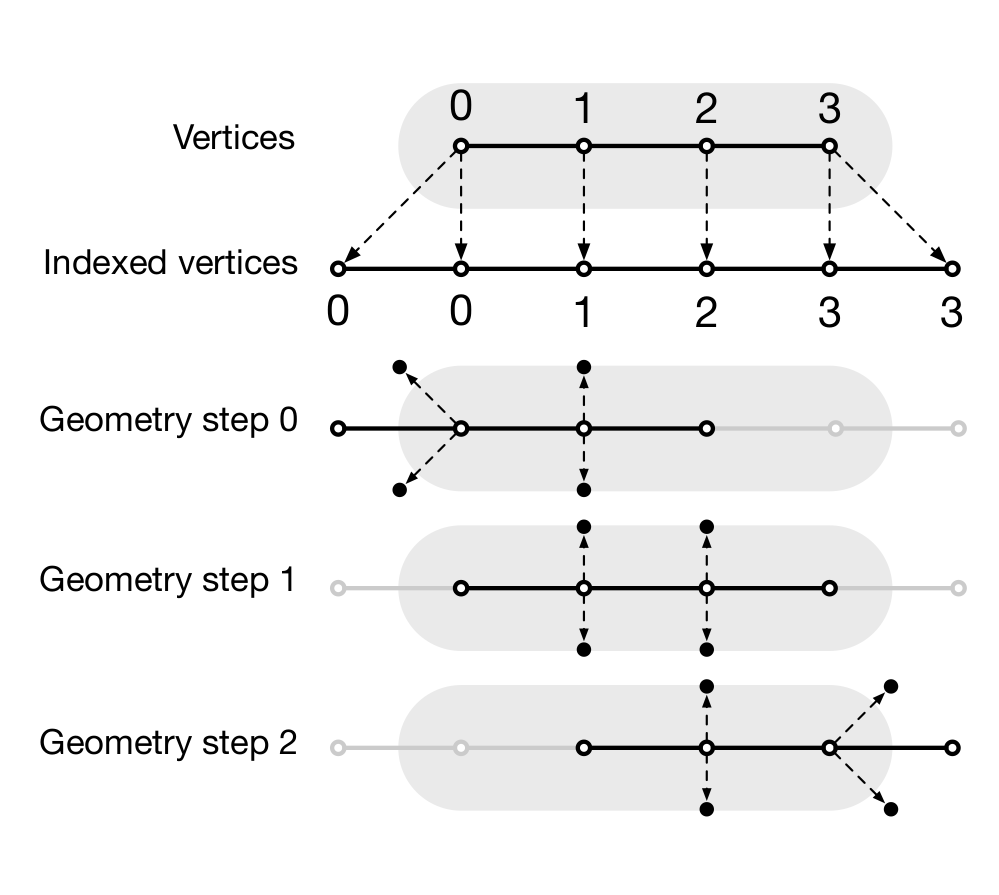

Figure